Machine Learning Model Evaluation Metrics: 17 Practical Wins from Real Projects

When I built my first machine learning model, the accuracy was close to 94%.

I was proud. The notebook looked clean. The metric looked impressive.

Then the model went into a real environment—and failed badly.

That experience taught me a hard lesson: model evaluation metrics are not numbers to impress people; they are tools to protect you from bad decisions.

This guide is written from that mindset.

Not as a syllabus.

Not as an interview cheat sheet.

But as a practical, engineer-focused explanation of machine learning model evaluation metrics—the way they actually behave in real projects.

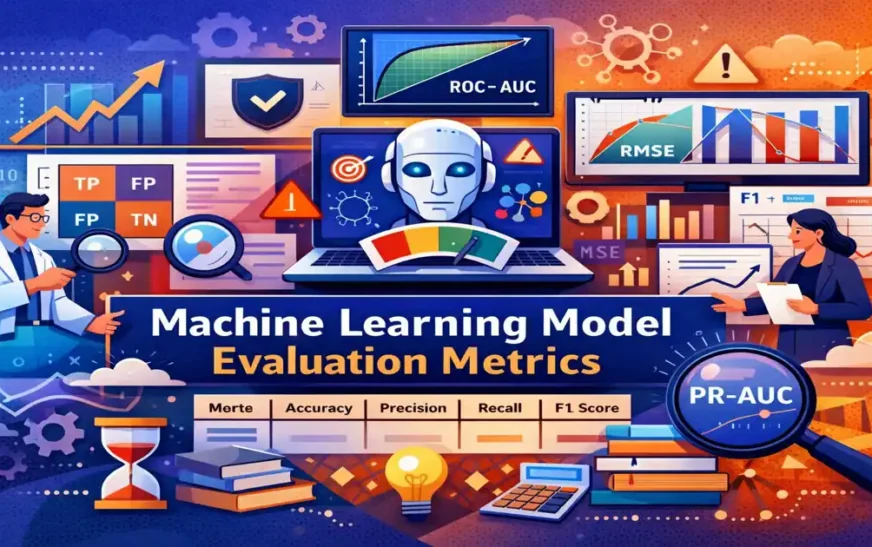

Introduction to Machine Learning Model Evaluation Metrics

Machine learning model evaluation metrics are used to answer one simple question:

“Can I trust this model to make decisions in the real world?”

Most beginners assume the answer comes from a single number—usually accuracy.

Most experienced engineers know that accuracy is often the most misleading metric in the room.

In real projects:

- Data is messy
- Classes are imbalanced
- Business costs are asymmetric
- And models behave very differently after deployment

That’s why model evaluation must be treated as a process, not a checkbox.

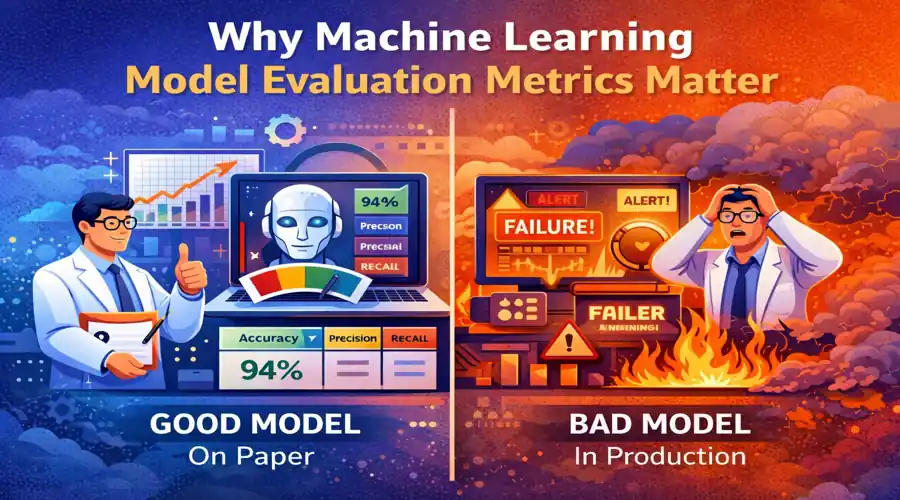

Why Machine Learning Model Evaluation Metrics Matter

A “good” model on paper can still be a bad model in production.

Accuracy vs Real-World Performance

Imagine a fraud detection system where only 1% of transactions are fraudulent.

A model that predicts “not fraud” every time gives you 99% accuracy.

Would you deploy it?

Of course not.

This is where machine learning model evaluation metrics matter—not to look good, but to reveal uncomfortable truths early.
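The fraud scenario above is easy to reproduce. The sketch below uses made-up labels (1,000 transactions, 1% fraud) and a "model" that always answers "not fraud"; the numbers are illustrative, not from a real project.

```python
# Hypothetical data: 1,000 transactions, 1% fraud (label 1 = fraud).
y_true = [1] * 10 + [0] * 990

# A "model" that always predicts "not fraud".
y_pred = [0] * len(y_true)

# Accuracy: share of predictions that match the label.
correct = sum(t == p for t, p in zip(y_true, y_pred))
accuracy = correct / len(y_true)

# Recall: share of actual fraud cases the model caught.
caught = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
recall = caught / sum(y_true)

print(f"accuracy={accuracy:.2f}, recall={recall:.2f}")  # accuracy=0.99, recall=0.00
```

The accuracy is high, yet the model never catches a single fraud case.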

The Cost of Wrong Predictions

In real systems:

- A false negative might mean missing fraud or disease
- A false positive might mean blocking a real customer or triggering false alarms

Metrics exist to expose these trade-offs, not hide them.

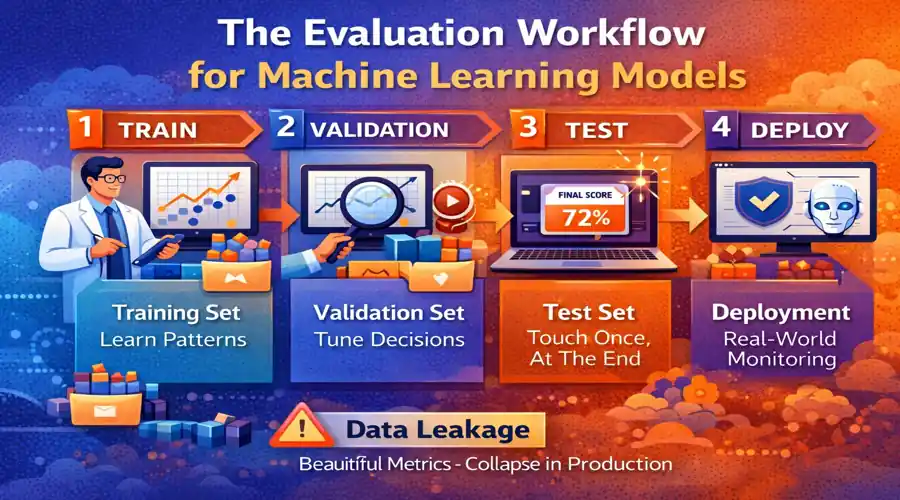

The Evaluation Workflow for Machine Learning Models

Before discussing individual metrics, it’s important to understand where evaluation fits in the workflow.

Train, Validation, and Test Sets

A common beginner mistake is evaluating a model on data it has already seen.

A correct setup looks like this:

- Training set → used to learn patterns
- Validation set → used to tune decisions
- Test set → touched once, at the end

If your test score keeps improving every time you tweak the model, you are effectively tuning on the test set, and it no longer gives you an unbiased estimate.
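A minimal sketch of the three-way split, using only the standard library. The function name `three_way_split` and the 70/15/15 proportions are assumptions chosen for illustration, not a recommendation.

```python
import random

def three_way_split(rows, val_frac=0.15, test_frac=0.15, seed=42):
    """Shuffle once, then cut into train / validation / test."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)  # fixed seed keeps the split reproducible
    n_test = int(len(rows) * test_frac)
    n_val = int(len(rows) * val_frac)
    test = rows[:n_test]
    val = rows[n_test:n_test + n_val]
    train = rows[n_test + n_val:]
    return train, val, test

train, val, test = three_way_split(range(100))
print(len(train), len(val), len(test))
```

For time-ordered data, a random shuffle like this would let future rows into the training set, so a split by date is the safer choice there.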

Cross-Validation (When and Why)

Cross-validation helps when:

- Data is limited
- Results fluctuate heavily
- You want stable estimates

But it’s not free. It increases compute cost and complexity.

Use it deliberately, not automatically.
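To make the cost visible, here is a rough sketch of how k-fold indices can be generated by hand. The helper `k_fold_indices` is hypothetical, and it assumes the rows were already shuffled. Each fold means one more model fit, which is where the extra compute comes from.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs; every sample is validated exactly once."""
    # Spread the remainder over the first folds so sizes differ by at most 1.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size

folds = list(k_fold_indices(n_samples=10, k=3))
for train_idx, val_idx in folds:
    # In a real run you would fit on train_idx and score on val_idx here.
    print(len(train_idx), val_idx)
```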

Data Leakage: The Silent Killer

Data leakage doesn’t crash code.

It produces beautiful metrics that collapse in production.

Common leakage sources:

- Scaling before splitting data
- Using future information in time-based problems
- Feature engineering done outside pipelines

If metrics look too good, assume leakage first.
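The first leakage source, scaling before splitting, can be shown with a tiny standardization example. The feature values and the helpers `fit_scaler` and `transform` are made up for illustration.

```python
from statistics import mean, pstdev

def fit_scaler(values):
    """Learn mean and standard deviation from the given values only."""
    return mean(values), pstdev(values)

def transform(values, mu, sigma):
    """Standardize values with statistics learned elsewhere."""
    return [(v - mu) / sigma for v in values]

# Hypothetical feature column; the last two rows are the held-out test set.
feature = [10.0, 12.0, 11.0, 13.0, 9.0, 11.0, 50.0, 55.0]
train, test = feature[:6], feature[6:]

# Leaky: statistics computed on all rows, so test values shape the training features.
leaky_mu, leaky_sigma = fit_scaler(feature)

# Safe: statistics come from the training rows only and are reused on the test rows.
mu, sigma = fit_scaler(train)
train_scaled = transform(train, mu, sigma)
test_scaled = transform(test, mu, sigma)

print(f"leaky mean={leaky_mu:.2f}, train-only mean={mu:.2f}")
```

The leaky mean is pulled upward by the two test rows, which means the training features already "know" something about data the model was never supposed to see.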

Classification Model Evaluation Metrics Explained

Most discussions around machine learning model evaluation metrics focus on classification—and for good reason. These models often drive high-risk decisions.

Confusion Matrix: The Foundation

Everything starts with the confusion matrix.

It forces you to look at:

- True positives
- False positives
- True negatives
- False negatives

If you don’t understand this matrix, the rest of the metrics are just math.
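The four cells can be counted in a few lines. This is a plain-Python sketch for the binary case; the labels are invented and `confusion_counts` is a hypothetical helper.

```python
def confusion_counts(y_true, y_pred, positive=1):
    """Return (tp, fp, tn, fn) for a binary problem."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if p == positive and t == positive:
            tp += 1   # predicted positive, actually positive
        elif p == positive:
            fp += 1   # predicted positive, actually negative
        elif t == positive:
            fn += 1   # predicted negative, actually positive
        else:
            tn += 1   # predicted negative, actually negative
    return tp, fp, tn, fn

y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 1, 0, 1, 0]
tp, fp, tn, fn = confusion_counts(y_true, y_pred)
print(tp, fp, tn, fn)  # 3 1 3 1
```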

Accuracy: When It Works (and When It Lies)

Accuracy works when:

- Classes are balanced
- Costs of errors are similar
- The problem is simple

Accuracy fails when:

- Data is imbalanced
- Rare events matter
- Decisions have consequences

Treat accuracy as a sanity check, not a decision-maker.

Precision, Recall, and F1-Score

These metrics exist because accuracy is insufficient.

- Precision answers: “When the model says YES, how often is it right?”
- Recall answers: “How many actual YES cases did the model catch?”

In real projects:

- Precision protects you from false alarms
- Recall protects you from missed opportunities

F1-score balances both—but hides which side you are sacrificing.

Never deploy a model based on F1 alone. Always inspect precision and recall separately.
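Here is a small sketch of why F1 alone can mislead. The two "models" below are hypothetical confusion-matrix counts picked so that both land on the same F1 while failing in opposite directions.

```python
def precision_recall_f1(tp, fp, fn):
    """Compute precision, recall, and F1 from confusion-matrix counts."""
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1

# Model A: rarely raises false alarms, but misses most positives.
model_a = precision_recall_f1(tp=80, fp=20, fn=120)
# Model B: catches most positives, but most of its alarms are false.
model_b = precision_recall_f1(tp=160, fp=240, fn=40)

for name, (p, r, f1) in [("A", model_a), ("B", model_b)]:
    print(f"model {name}: precision={p:.2f} recall={r:.2f} f1={f1:.3f}")
```

Both models score an F1 of about 0.533, yet one has precision 0.80 and recall 0.40 and the other has the reverse. Which one is acceptable depends entirely on which error is more expensive.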

ROC-AUC vs PR-AUC

ROC-AUC looks impressive in presentations.

PR-AUC tells the truth in imbalanced datasets.

If your positive class is rare:

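- ROC-AUC can look high even for weak models, because the false positive rate is diluted by the large number of negatives
- PR-AUC reflects actual usefulness, because precision only looks at the cases the model flagged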

Experienced engineers prefer PR-AUC for rare-event problems.
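The gap between the two can be reproduced with synthetic scores. In this sketch, average precision stands in for PR-AUC (a common single-number summary of the precision-recall curve; treat that substitution as an assumption). The data is invented: 5 positives among 1,000 rows, with 50 negatives scored above every positive. The functions assume at least one positive and one negative.

```python
def roc_auc(y_true, scores):
    """Probability that a random positive outranks a random negative (ties count half)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def average_precision(y_true, scores):
    """Mean of the precision values at each rank where a true positive appears."""
    ranked = sorted(zip(scores, y_true), key=lambda pair: pair[0], reverse=True)
    hits, total = 0, 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            total += hits / rank  # precision among the top `rank` rows
    return total / hits

# Hypothetical scores: 50 negatives outrank all 5 positives.
y_true = [0] * 50 + [1] * 5 + [0] * 945
scores = [0.9] * 50 + [0.8] * 5 + [0.1] * 945

auc = roc_auc(y_true, scores)
ap = average_precision(y_true, scores)
print(f"ROC-AUC={auc:.3f}, average precision={ap:.3f}")
```

ROC-AUC comes out near 0.95 because 945 of 995 negatives sit below the positives. Average precision is below 0.06, because anyone reviewing the top of the list sees 50 false alarms before the first real case.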

Log Loss: Probability Quality Matters

Some models output probabilities, not decisions.

Log loss evaluates:

- How confident the model is
- How wrong that confidence is

| Metric | What it Really Tells You | Best Used When | Common Mistake |
|---|---|---|---|
| Accuracy | Overall correctness | Classes are balanced | Misleading for imbalanced data |
| Precision | Trustworthiness of positive predictions | False alarms are costly (spam, alerts) | Ignoring missed positives |
| Recall | Ability to catch positives | Missing positives is risky (fraud, medical) | Generating too many false alarms |
| F1-Score | Balance between precision & recall | Need a single comparison number | Hides which side is weak |
| ROC-AUC | Ranking ability across thresholds | Balanced datasets | Overestimates performance on rare classes |
| PR-AUC | Performance on positive class | Highly imbalanced datasets | Harder to explain to non-technical teams |
| Log Loss | Probability confidence quality | Probabilistic outputs | Misused when only labels matter |

In pricing, ranking, and recommendation systems, log loss matters more than accuracy.
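A short sketch of how log loss separates two models that accuracy cannot tell apart. The probabilities are invented. Both sets produce identical labels at a 0.5 threshold, and both get the third case wrong.

```python
import math

def log_loss(y_true, probs, eps=1e-15):
    """Mean negative log-likelihood for binary labels and predicted P(y=1)."""
    total = 0.0
    for t, p in zip(y_true, probs):
        p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1 - p))
    return total / len(y_true)

y_true = [1, 0, 1, 0]
cautious = [0.7, 0.3, 0.4, 0.3]           # misses the third case, but only mildly
overconfident = [0.99, 0.01, 0.01, 0.01]  # same miss, stated with near certainty

print(f"cautious:      {log_loss(y_true, cautious):.3f}")
print(f"overconfident: {log_loss(y_true, overconfident):.3f}")
```

The overconfident model is penalized far more heavily for the same wrong label, because it assigned a probability of 0.01 to something that actually happened.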

Regression Model Evaluation Metrics Explained

Regression problems feel simpler—but they hide subtle traps.

MAE, MSE, and RMSE

- MAE tells you average error in plain units
- MSE punishes large errors heavily
- RMSE takes the square root of MSE, which puts the error back in the original units

If large mistakes are unacceptable, RMSE exposes them.

If interpretability matters, MAE is your friend.
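The difference shows up clearly when two sets of predictions share the same MAE. The values below are made up: one model is off by 10 every time, the other is perfect three times and off by 40 once.

```python
import math

def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

def mse(y_true, y_pred):
    """Mean squared error."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)

def rmse(y_true, y_pred):
    """Root mean squared error, in the same units as the target."""
    return math.sqrt(mse(y_true, y_pred))

y_true = [100, 100, 100, 100]
steady = [90, 110, 90, 110]   # four errors of 10
spiky = [100, 100, 100, 140]  # one error of 40

for name, pred in [("steady", steady), ("spiky", spiky)]:
    print(f"{name}: MAE={mae(y_true, pred):.1f} RMSE={rmse(y_true, pred):.1f}")
```

Both have an MAE of 10, but the RMSE doubles from 10 to 20 for the model with the single large miss.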

R²: Useful but Dangerous

R² answers:

“How much variance did the model explain?”

It does not answer:

- Is the prediction good?
- Is the error acceptable?
- Will this generalize?

Always pair R² with an absolute error metric.
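As a rough illustration with invented numbers, the predictions below explain more than 99% of the variance and are still off by 100 units on every row. Whether 100 units is acceptable is a business question that R² cannot answer.

```python
def r_squared(y_true, y_pred):
    """1 - (residual sum of squares / total sum of squares)."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1 - ss_res / ss_tot

def mae(y_true, y_pred):
    """Mean absolute error, in the units of the target."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)

y_true = [1000, 2000, 3000, 4000]
y_pred = [1100, 1900, 3100, 3900]

r2 = r_squared(y_true, y_pred)
err = mae(y_true, y_pred)
print(f"R^2={r2:.3f}, MAE={err:.0f}")
```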

MAPE and Business Interpretation

MAPE looks intuitive—but breaks when true values approach zero.

Use it cautiously, especially in finance and demand forecasting.
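The near-zero problem comes from dividing by the true value. In this sketch with hypothetical demand figures, the third prediction is off by 20 units in one case and by only 5 units in the other, yet the smaller miss produces the far larger percentage.

```python
def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent. Undefined when a true value is 0."""
    return 100 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)

# Third item: true value 400, prediction off by 20.
normal = mape([100, 200, 400], [110, 190, 420])

# Third item: true value 0.5, prediction off by only 5.
near_zero = mape([100, 200, 0.5], [110, 190, 5.5])

print(f"normal={normal:.1f}%, near_zero={near_zero:.1f}%")
```

One small-valued row turns a single-digit MAPE into one above 300%.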

| Metric | Error Interpretation | Sensitive To | When Engineers Prefer It |
|---|---|---|---|
| MAE | Average absolute error | Outliers (low) | When interpretability matters |
| MSE | Squared error | Outliers (very high) | When large mistakes are unacceptable |
| RMSE | Error in original units | Outliers (high) | When stakeholders want intuitive scale |
| R² | Variance explained | Data distribution | Comparing models on same dataset |
| MAPE | Percentage error | Near-zero values | Business reports (with caution) |
| Huber Loss | Hybrid MAE + MSE | Tunable | Robust regression problems |

Unsupervised Learning Model Evaluation Metrics

Unsupervised models don’t have labels—which makes evaluation tricky.

Silhouette Score

Silhouette score measures how close each point is to its own cluster compared with the nearest other cluster. It ranges from -1 to 1, and higher is better.

It helps compare models—but does not guarantee meaningful clusters.
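A minimal sketch of the calculation on one-dimensional points, using absolute difference as the distance. The data and labelings are invented, and the function assumes at least two clusters and distinct points.

```python
def silhouette_score(points, labels):
    """Mean silhouette over all points, using absolute difference as the distance."""
    clusters = {}
    for x, lab in zip(points, labels):
        clusters.setdefault(lab, []).append(x)
    scores = []
    for x, lab in zip(points, labels):
        own = clusters[lab]
        if len(own) == 1:
            scores.append(0.0)  # usual convention for singleton clusters
            continue
        # a: mean distance to the other members of the point's own cluster
        a = sum(abs(x - y) for y in own) / (len(own) - 1)
        # b: mean distance to the members of the nearest other cluster
        b = min(sum(abs(x - y) for y in other) / len(other)
                for other_lab, other in clusters.items() if other_lab != lab)
        scores.append((b - a) / max(a, b))
    return sum(scores) / len(scores)

points = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
good = silhouette_score(points, [0, 0, 0, 1, 1, 1])  # matches the two visible groups
bad = silhouette_score(points, [0, 1, 0, 1, 0, 1])   # arbitrary alternating labels
print(f"good={good:.2f}, bad={bad:.2f}")
```

The sensible labeling scores close to 1 and the arbitrary one scores near zero, which is useful for comparison but says nothing about whether the two groups mean anything to the business.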

Davies–Bouldin and Calinski–Harabasz

These metrics measure cluster compactness and separation. Lower is better for Davies–Bouldin; higher is better for Calinski–Harabasz.

They are relative indicators, not proof of correctness.

Human and Business Validation

In practice:

- Visual inspection
- Domain knowledge
- Business validation

often matter more than numerical scores in unsupervised learning.

Overfitting, Underfitting, and the Bias–Variance Tradeoff

| Situation | Training Metric | Validation Metric | Typical Fix |
|---|---|---|---|
| Overfitting | Very high | Much lower | Regularization, more data |
| Underfitting | Low | Low | Better features, more complex model |
| Good Fit | High | Slightly lower | Monitor in production |
| Data Leakage | Extremely high | Unrealistically high | Rebuild pipeline |

Machine learning model evaluation metrics reveal how a model fails, not just how it performs.

Signs of Overfitting

- Training score improves continuously
- Validation score stagnates or drops

Signs of Underfitting

- Both training and validation scores are poor
- Model is too simple for the problem

Metrics don’t fix these issues—but they expose them early.

Choosing the Right Machine Learning Model Evaluation Metric

| Problem Type | Primary Metric | Supporting Metrics | Why |
|---|---|---|---|
| Fraud Detection | Recall / PR-AUC | Precision, cost-based metric | Missing fraud is expensive |
| Medical Screening | Recall | Precision, confusion matrix | False negatives are dangerous |
| Spam Filtering | Precision | Recall, F1 | False positives hurt UX |
| Credit Scoring | PR-AUC | KS-statistic, recall | Class imbalance is extreme |
| Sales Forecasting | MAE / RMSE | MAPE, baseline error | Interpretability matters |
| Recommendation | Log Loss | Top-K accuracy | Probability ranking is key |

There is no “best” metric, only the metric that is best aligned with the problem.

Metric Selection by Problem Type

- Fraud detection → Recall, PR-AUC
- Medical screening → Recall first, then precision
- Spam filtering → Precision-focused
- Forecasting → MAE / RMSE with baseline comparison

Business-Driven Metric Selection

If you know the cost of errors:

- Translate predictions into business loss
- Optimize for expected cost, not academic scores
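One way to sketch this is to price each error type and pick the decision threshold with the lowest total cost on a labeled validation set. The costs (5 per false positive, 100 per false negative), the scores, and the helper `total_cost` are all assumptions for illustration.

```python
def total_cost(y_true, probs, threshold, cost_fp, cost_fn):
    """Total cost of acting on predictions at a given threshold."""
    cost = 0.0
    for t, p in zip(y_true, probs):
        flagged = p >= threshold
        if flagged and t == 0:
            cost += cost_fp   # blocked a legitimate case
        elif not flagged and t == 1:
            cost += cost_fn   # missed a real positive
    return cost

# Hypothetical validation labels and predicted probabilities.
y_true = [0, 0, 0, 0, 0, 0, 1, 0, 1, 1]
probs = [0.05, 0.10, 0.20, 0.30, 0.35, 0.45, 0.40, 0.60, 0.70, 0.90]

thresholds = [0.3, 0.5, 0.7]
costs = {th: total_cost(y_true, probs, th, cost_fp=5, cost_fn=100) for th in thresholds}
best = min(costs, key=costs.get)
print(costs, "best threshold:", best)
```

With these numbers the 0.7 threshold gives the highest accuracy (9 of 10 correct) but costs 100, while the 0.3 threshold is correct only 6 times out of 10 and costs 20. The cheapest threshold and the most accurate one are not the same.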

This is where good engineers stand out.

Model Evaluation in Production Systems

| Dataset | Purpose | Should Influence Decisions? | Common Abuse |
|---|---|---|---|
| Training | Learn parameters | ❌ No | Reporting as final score |
| Validation | Tune model & thresholds | ⚠️ Limited | Overfitting to validation |
| Test | Final unbiased evaluation | ✅ Yes | Repeated testing |

Evaluation doesn’t stop at deployment.

Data Drift and Concept Drift

A model can degrade even if code doesn’t change.

Metrics must be monitored over time, not just once.

Retraining Triggers

Practical rule:

- Track core evaluation metrics weekly or monthly
- Retrain when deviation crosses a defined threshold
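The rule above could be sketched as a simple check. The function name `needs_retraining`, the 10% relative-drop threshold, and the weekly recall values are all hypothetical; the right threshold depends on your own tolerance.

```python
def needs_retraining(baseline, recent_values, max_relative_drop=0.10):
    """Flag retraining when the recent average falls too far below the baseline."""
    recent = sum(recent_values) / len(recent_values)
    drop = (baseline - recent) / baseline
    return drop > max_relative_drop

baseline_recall = 0.80  # recall measured at deployment time (hypothetical)

stable_weeks = [0.79, 0.80, 0.78, 0.81]
decaying_weeks = [0.78, 0.74, 0.69, 0.66]

print(needs_retraining(baseline_recall, stable_weeks))    # False
print(needs_retraining(baseline_recall, decaying_weeks))  # True
```

This only works for metrics where higher is better. For error metrics such as MAE, the comparison would need to be flipped.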

Common Mistakes in Machine Learning Model Evaluation

| Mistake | Why It Happens | Correct Practice |
|---|---|---|
| Using accuracy everywhere | Simplicity | Match metric to risk |
| Single-metric reporting | Convenience | Use metric sets |
| Ignoring baselines | Overconfidence | Compare against dummy models |
| Offline-only evaluation | Time pressure | Monitor in production |
| Chasing leaderboard scores | Ego | Optimize for business value |

Experienced engineers avoid these:

- Trusting a single metric
- Evaluating on training data
- Ignoring class imbalance
- Forgetting business context
- Skipping baseline models

Metrics are guards—not guarantees.

| What to Monitor | Metric Example | Why It Matters |
|---|---|---|
| Prediction quality | Recall / MAE | Performance decay |
| Data distribution | Feature mean / PSI | Detect data drift |
| Confidence | Log loss | Overconfidence risk |
| Business outcome | Cost / revenue | Real success signal |
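For the PSI entry in the table, here is a rough sketch of the population stability index: bin a feature, compare the share of rows per bin between a reference sample and a recent one, and sum `(actual - expected) * ln(actual / expected)`. The bin edges, the data, and the `eps` floor for empty bins are assumptions made for this example.

```python
import math

def psi(expected, actual, bin_edges, eps=1e-6):
    """Population stability index between two samples of one feature."""
    def shares(values):
        counts = [0] * (len(bin_edges) + 1)
        for v in values:
            # Bin index = number of (sorted) edges strictly below the value.
            idx = sum(v > edge for edge in bin_edges)
            counts[idx] += 1
        # Floor empty bins at eps so the logarithm stays defined.
        return [max(c / len(values), eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))

# Hypothetical feature values at training time and after a shift in production.
reference = [0.1 * i for i in range(100)]
shifted = [v + 3 for v in reference]
edges = [2.5, 5.0, 7.5]

print(f"no drift: {psi(reference, reference, edges):.3f}")
print(f"shifted:  {psi(reference, shifted, edges):.3f}")
```

Identical samples give a PSI of zero, and the value grows as the distributions move apart. The result is sensitive to how the bins are chosen, so the edges should be fixed once from the reference data.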

Frequently Asked Questions on Machine Learning Model Evaluation Metrics

What are machine learning model evaluation metrics?

Machine learning model evaluation metrics are quantitative measures used to assess how well a model performs on unseen data. They help engineers understand prediction quality, generalization ability, and real-world reliability beyond just accuracy.

Why is accuracy not a reliable metric for all machine learning models?

Accuracy can be misleading when datasets are imbalanced or when different types of errors have different costs. In such cases, metrics like precision, recall, PR-AUC, or cost-based metrics provide a more realistic picture of model performance.

Which machine learning model evaluation metric is best for imbalanced datasets?

For imbalanced datasets, metrics such as precision, recall, F1-score, and especially PR-AUC are more informative than accuracy. These metrics focus on the minority class, which usually matters most in real-world problems.

How do I choose the right evaluation metric for a machine learning problem?

The right evaluation metric depends on the problem context, error costs, and business impact. A good approach is to first identify which type of error is more expensive and then select metrics that highlight that risk clearly.

What is the difference between precision and recall in model evaluation?

Precision measures how many predicted positives are actually correct, while recall measures how many actual positives the model successfully identifies. In practice, precision reduces false alarms, and recall reduces missed opportunities.

What is ROC-AUC, and when should it be avoided?

ROC-AUC measures how well a model ranks positive and negative classes across thresholds. It should be avoided as a primary metric in highly imbalanced datasets, where PR-AUC provides a more realistic evaluation.

Which evaluation metrics are commonly used for regression models?

Common regression evaluation metrics include MAE, MSE, RMSE, and R². Engineers usually prefer MAE or RMSE for interpretability and use R² only as a supporting metric, not a decision driver.

How do evaluation metrics help detect overfitting in machine learning models?

Overfitting becomes visible when training metrics are significantly better than validation or test metrics. Monitoring this gap helps engineers identify whether a model is memorizing data instead of learning general patterns.

Do machine learning model evaluation metrics change after deployment?

Yes, evaluation metrics can degrade over time due to data drift or concept drift. That’s why production systems must continuously monitor model performance instead of relying only on offline evaluation results.

What is the biggest mistake engineers make in model evaluation?

The most common mistake is relying on a single metric, usually accuracy. Real-world model evaluation requires multiple complementary metrics and a clear understanding of business and domain constraints.

How do machine learning model evaluation metrics affect real business decisions?

Machine learning model evaluation metrics directly influence business decisions by determining how much risk a model introduces. Choosing the wrong metric can lead to financial loss, poor user experience, or compliance issues, even if the model appears accurate.

Can the same machine learning model evaluation metrics be used for all problems?

No. Machine learning model evaluation metrics must be selected based on the problem type, data distribution, and cost of errors. Metrics suitable for classification may be meaningless for regression, clustering, or forecasting tasks.

Why do machine learning model evaluation metrics change after deployment?

Machine learning model evaluation metrics often change after deployment due to data drift, user behavior changes, or evolving real-world conditions. This is why continuous monitoring is essential for production models.

How many machine learning model evaluation metrics should be tracked for one model?

In practice, engineers track a small set of complementary machine learning model evaluation metrics, usually one primary metric aligned with business goals and two or three supporting metrics for diagnostics and monitoring.

Are machine learning model evaluation metrics enough to guarantee a good model?

No. Machine learning model evaluation metrics provide quantitative insight, but they cannot replace domain knowledge, sanity checks, and real-world validation. A model can score well numerically and still fail operationally.

Conclusion: How to Think About Model Evaluation

Machine learning model evaluation metrics are not about finding the highest number.

They are about:

- Understanding risk
- Anticipating failure
- Making informed trade-offs

If you remember one thing, let it be this:

A model that looks good on paper but fails in reality was not evaluated properly.

If you want to go deeper next:

- Model Training and Validation Workflows

That topic builds directly on the evaluation mindset you’ve learned here.