Introduction to Agentic AI Systems (Why This Shift Matters)

For the past few years, most organizations have interacted with artificial intelligence (AI) through assistants (chat-based systems that respond to prompts, generate text, summarize documents, or answer questions). These systems delivered real productivity gains, but they also revealed a fundamental limitation: assistants react, they do not act.

As enterprises attempt to move from experimentation to real operational impact, a new paradigm is emerging. Agentic AI Systems represent a shift from reactive assistance to goal-driven, autonomous workflows that can plan, execute, observe outcomes, and adapt over time.

This transition is not about smarter models alone. It is about system design, governance, and accountability. Organizations that understand this distinction are beginning to treat AI not as a feature, but as an operational capability embedded into workflows.

Agentic AI Systems mark the point where AI stops being a conversational tool and starts becoming a participant in work execution.

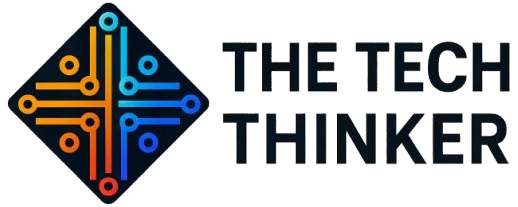

What Are Agentic AI Systems?

Agentic AI Systems are goal-driven AI systems capable of planning, executing, monitoring, and adapting actions across time using tools, memory, and decision logic, while operating within explicit constraints and oversight mechanisms.

This definition deliberately avoids buzzwords. The essence of agentic behavior lies in agency—the capacity to pursue objectives rather than simply respond to inputs.

Key characteristics of agentic systems include:

- Persistent goals rather than single-turn prompts
- Multi-step reasoning and task decomposition
- Interaction with external tools and systems
- State awareness and memory across actions
- Evaluation of outcomes and corrective behavior
- Embedded governance and human override

These systems are not synonymous with autonomy in the absolute sense. Instead, they enable bounded autonomy, where intelligent workflows are permitted to act independently within carefully defined limits.

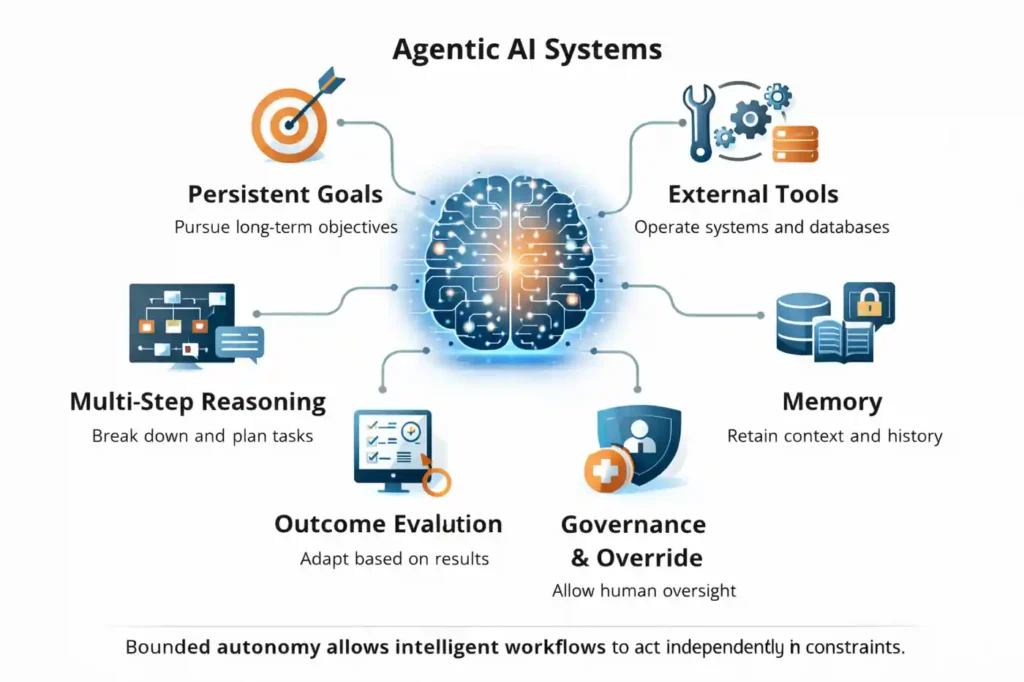

Agentic AI Systems vs Traditional AI Assistants

| Dimension | AI Assistants | Agentic Systems |
|---|---|---|
| Core Role | Support interaction | Execute workflows |
| Behavior | Reactive | Goal-oriented |
| State | Stateless | Stateful |
| Time Horizon | Single interaction | Continuous |
| Tool Use | Optional | Essential |
| Accountability | Human-owned | Shared |
| Risk Profile | Low | Medium to high |

This distinction explains why many assistant-based deployments plateau quickly. They improve efficiency at the edges but do not fundamentally change how work flows through an organization. Agentic workflows, by contrast, reshape execution paths themselves.

The Evolution from Assistants to Autonomous Workflows

The journey toward agent-based workflows mirrors earlier transitions in enterprise software. Scripts evolved into applications, applications evolved into platforms, and platforms evolved into ecosystems. AI is now following a similar path.

Early deployments focused on isolated tasks: answering questions, generating reports, or classifying data. These deployments were valuable but inherently limited. Each action required human initiation and coordination.

Modern agentic approaches remove this bottleneck by allowing AI to own sequences of actions, not just individual steps. This evolution represents a shift from task assistance to workflow ownership.

Autonomy Spectrum in Agentic AI Systems

Autonomy exists on a gradient rather than as a binary switch.

| Autonomy Level | Description | Typical Use |
|---|---|---|
| Manual | AI suggests, humans execute | Decision support |
| Assisted | AI executes with approval | Sensitive workflows |
| Conditional | AI acts within constraints | Operational automation |
| Autonomous | AI owns end-to-end outcomes | Rare, high-maturity cases |

Most enterprises deliberately avoid full autonomy. Instead, they allow agentic workflows to earn trust progressively through validation, monitoring, and controlled expansion of authority.
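The autonomy levels above can be made explicit as a gate that every proposed action passes through. The following is a minimal Python sketch, not a reference implementation; `AutonomyLevel`, `gate_action`, and the returned strings are hypothetical names, and `within_constraints` stands in for whatever policy check a real deployment would run.

```python
from enum import Enum


class AutonomyLevel(Enum):
    MANUAL = 1       # AI suggests, humans execute
    ASSISTED = 2     # AI executes with approval
    CONDITIONAL = 3  # AI acts within constraints
    AUTONOMOUS = 4   # AI owns end-to-end outcomes


def gate_action(level: AutonomyLevel, within_constraints: bool,
                human_approved: bool = False) -> str:
    """Decide what the agent may do with a proposed action at a given level."""
    if level is AutonomyLevel.MANUAL:
        return "suggest_only"                      # a human performs the action
    if level is AutonomyLevel.ASSISTED:
        return "execute" if human_approved else "await_approval"
    if level is AutonomyLevel.CONDITIONAL:
        if within_constraints:
            return "execute"                       # inside the agreed envelope
        return "execute" if human_approved else "await_approval"
    return "execute"                               # AUTONOMOUS: rare, high-maturity cases
```

Because the level is data rather than implicit behavior, moving an agent from Assisted to Conditional becomes a reviewable configuration change, which is one way to let autonomy be earned progressively.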

Core Architecture of Agentic AI Systems

One of the most damaging misconceptions is that agentic behavior emerges simply from using more advanced models. In reality, agentic systems are architected systems, not a single monolithic intelligence.

They consist of multiple interacting layers, each responsible for a specific aspect of agency.

Core Components of Agentic Systems

| Component | Responsibility |
|---|---|
| Goal Manager | Defines and tracks objectives |
| Planner | Decomposes goals into tasks |
| Executor | Performs actions via tools |
| Memory Layer | Maintains context and history |
| Evaluator | Assesses outcomes |
| Governor | Enforces constraints and policies |

This modularity enables control, auditability, and resilience.
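One way to keep the components separable is to give each a narrow interface. The sketch below assumes Python 3.9+ and uses `typing.Protocol`; the class and method names (`plan`, `execute`, `evaluate`, `allows`) are hypothetical, and the stand-in components at the bottom exist only to make the example runnable.

```python
from dataclasses import dataclass, field
from typing import Protocol


class Planner(Protocol):
    def plan(self, goal: str, memory: list[str]) -> list[str]: ...


class Executor(Protocol):
    def execute(self, task: str) -> str: ...


class Evaluator(Protocol):
    def evaluate(self, task: str, result: str) -> bool: ...


class Governor(Protocol):
    def allows(self, task: str) -> bool: ...


@dataclass
class AgentSystem:
    """Wires the components together; `pursue` plays the goal-manager role."""
    planner: Planner
    executor: Executor
    evaluator: Evaluator
    governor: Governor
    memory: list[str] = field(default_factory=list)                 # memory layer
    audit_log: list[tuple[str, str]] = field(default_factory=list)  # (status, task)

    def pursue(self, goal: str) -> bool:
        for task in self.planner.plan(goal, self.memory):
            if not self.governor.allows(task):           # policy check before acting
                self.audit_log.append(("blocked", task))
                return False
            result = self.executor.execute(task)
            ok = self.evaluator.evaluate(task, result)
            self.memory.append(f"{task} -> {result}")
            self.audit_log.append(("ok" if ok else "failed", task))
            if not ok:
                return False
        return True


# Trivial stand-in components, for illustration only.
class TwoStepPlanner:
    def plan(self, goal, memory):
        return [f"{goal}: collect data", f"{goal}: write summary"]


class EchoExecutor:
    def execute(self, task):
        return f"done({task})"


class PrefixEvaluator:
    def evaluate(self, task, result):
        return result.startswith("done")


class NoDeletesGovernor:
    def allows(self, task):
        return "delete" not in task


agent = AgentSystem(TwoStepPlanner(), EchoExecutor(), PrefixEvaluator(), NoDeletesGovernor())
succeeded = agent.pursue("weekly report")
```

Since the governor is consulted before every action and every step lands in `audit_log`, a reviewer can reconstruct what the system did and why it stopped, which is the auditability the modular design is meant to provide.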

Planner–Executor Pattern in Agentic AI Systems

The planner–executor pattern forms the backbone of most agent-based designs. The planner interprets objectives, generates task sequences, and evaluates dependencies. The executor carries out tasks using authorized tools and systems.

Crucially, the loop does not end at execution. Results are fed back into the planner, enabling adjustment and correction. This feedback loop distinguishes agentic workflows from static automation.
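The feedback loop can be sketched as follows, assuming the planner is any function that turns the goal and the observations so far into a list of remaining tasks. `planner_executor_loop`, `toy_plan`, and `toy_execute` are hypothetical; in practice the planner would often be a model call and the executor a set of tool invocations.

```python
from typing import Callable

Observation = tuple[str, bool]  # (task, succeeded)


def planner_executor_loop(
    goal: str,
    plan: Callable[[str, list[Observation]], list[str]],
    execute: Callable[[str], bool],
    max_rounds: int = 5,
) -> tuple[bool, list[Observation]]:
    """Plan, execute, feed results back to the planner, and replan."""
    observations: list[Observation] = []
    for _ in range(max_rounds):
        tasks = plan(goal, observations)
        if not tasks:                      # planner sees nothing left to do
            return True, observations
        for task in tasks:
            ok = execute(task)
            observations.append((task, ok))
            if not ok:                     # stop and let the planner revise
                break
    return False, observations             # budget exhausted: escalate instead


# Toy planner and executor (hypothetical) for an incident-triage goal.
def toy_plan(goal: str, observations: list[Observation]) -> list[str]:
    done = {task for task, ok in observations if ok}
    failed = {task for task, ok in observations if not ok}
    steps = ["fetch logs", "parse with strict schema", "file ticket"]
    if "parse with strict schema" in failed:
        steps[1] = "parse with lenient schema"   # corrective replanning
    return [s for s in steps if s not in done]


def toy_execute(task: str) -> bool:
    return task != "parse with strict schema"    # simulate one failing step


ok, trace = planner_executor_loop("triage incident", toy_plan, toy_execute)
```

On the first pass the strict parser fails, so the planner sees that failure and substitutes a lenient parser on the next round. The `max_rounds` budget keeps a confused planner from looping indefinitely; returning `False` is a signal to escalate rather than to keep trying.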

Tool Use and Action Layers in Agentic Systems

Tools transform reasoning into impact. Without tools, intelligent agents remain theoretical.

Common tool categories include:

- APIs for enterprise systems
- Databases and data warehouses
- File systems and document stores
- Code execution environments
- Monitoring and alerting platforms

However, tool access introduces significant risk. Mature deployments enforce least-privilege access, explicit authorization, sandboxed execution, and fail-safe defaults. Unchecked tool usage is one of the fastest paths to failure.
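Least-privilege access, explicit authorization, and fail-safe defaults can be enforced at a single choke point that all tool calls pass through. This is a minimal sketch under assumed names (`ToolRegistry`, `ToolDenied`, and the example tools are hypothetical); sandboxed execution is not shown, since it usually depends on process or container isolation outside the agent's own code.

```python
from dataclasses import dataclass, field
from typing import Any, Callable


class ToolDenied(Exception):
    """Raised when an agent calls a tool it has not been granted."""


@dataclass
class ToolRegistry:
    tools: dict[str, Callable[..., Any]] = field(default_factory=dict)
    grants: dict[str, set[str]] = field(default_factory=dict)   # agent id -> allowed tools
    audit: list[tuple[str, str, str]] = field(default_factory=list)

    def register(self, name: str, fn: Callable[..., Any]) -> None:
        self.tools[name] = fn

    def grant(self, agent_id: str, tool_name: str) -> None:
        """Explicit authorization: each agent gets only the tools it needs."""
        self.grants.setdefault(agent_id, set()).add(tool_name)

    def call(self, agent_id: str, tool_name: str, **kwargs: Any) -> Any:
        allowed = self.grants.get(agent_id, set())
        # Fail-safe default: anything not both registered and granted is denied.
        if tool_name not in self.tools or tool_name not in allowed:
            self.audit.append((agent_id, tool_name, "denied"))
            raise ToolDenied(f"{agent_id} is not authorized to call {tool_name}")
        try:
            result = self.tools[tool_name](**kwargs)
        except Exception:
            self.audit.append((agent_id, tool_name, "error"))
            raise
        self.audit.append((agent_id, tool_name, "allowed"))
        return result


# Hypothetical tools and agent for illustration.
registry = ToolRegistry()
registry.register("read_ticket", lambda ticket_id: {"id": ticket_id, "status": "open"})
registry.register("restart_service", lambda name: f"restarted {name}")
registry.grant("triage-agent", "read_ticket")

ticket = registry.call("triage-agent", "read_ticket", ticket_id="INC-1")
try:
    registry.call("triage-agent", "restart_service", name="billing")
    restart_denied = False
except ToolDenied:
    restart_denied = True
```

The triage agent can read tickets but cannot restart services, even though the restart tool exists, and both outcomes are recorded in the audit trail.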

Memory and State Management in Agentic Workflows

Memory allows agents to operate across time. Without memory, systems repeat mistakes and lose context.

| Memory Type | Purpose |
|---|---|
| Short-term | Task context |
| Episodic | Past actions and outcomes |
| Semantic | Domain knowledge |
| Task | Workflow continuity |

Memory must be governed as rigorously as data pipelines. Poor memory hygiene leads to drift, bias amplification, and compliance exposure.
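As a sketch of what governed memory might look like, the store below separates the four memory types, bounds short-term context, and tags every entry with its source so that anything derived from a bad source can be purged. All names are hypothetical, and the in-memory collections stand in for real storage.

```python
from collections import deque
from dataclasses import dataclass, field
from enum import Enum


class MemoryType(Enum):
    SHORT_TERM = "short_term"  # task context
    EPISODIC = "episodic"      # past actions and outcomes
    SEMANTIC = "semantic"      # domain knowledge
    TASK = "task"              # workflow continuity


@dataclass(frozen=True)
class MemoryEntry:
    kind: MemoryType
    content: str
    source: str  # provenance, so entries can be audited or purged later


@dataclass
class MemoryStore:
    short_term_limit: int = 3
    _short: deque = field(init=False, repr=False, default_factory=deque)
    _long: list = field(init=False, repr=False, default_factory=list)

    def __post_init__(self) -> None:
        # Bounded short-term memory: the oldest context drops out automatically.
        self._short = deque(maxlen=self.short_term_limit)

    def write(self, entry: MemoryEntry) -> None:
        if entry.kind is MemoryType.SHORT_TERM:
            self._short.append(entry)
        else:
            self._long.append(entry)

    def read(self, kind: MemoryType) -> list[str]:
        pool = self._short if kind is MemoryType.SHORT_TERM else self._long
        return [e.content for e in pool if e.kind is kind]

    def purge_source(self, source: str) -> None:
        """Hygiene: drop everything derived from a source found to be bad."""
        self._short = deque((e for e in self._short if e.source != source),
                            maxlen=self.short_term_limit)
        self._long = [e for e in self._long if e.source != source]


store = MemoryStore(short_term_limit=2)
for step in ["opened ticket", "read logs", "found root cause"]:
    store.write(MemoryEntry(MemoryType.SHORT_TERM, step, source="run-42"))
store.write(MemoryEntry(MemoryType.EPISODIC, "restart fixed INC-7", source="run-41"))
store.write(MemoryEntry(MemoryType.SEMANTIC, "billing depends on auth", source="wiki"))
recent = store.read(MemoryType.SHORT_TERM)
store.purge_source("run-41")  # e.g. run-41's outcome was later found to be misreported
```

Recording provenance on every write is what makes the purge possible; without it, a corrupted episode would keep influencing later decisions, which is one route to the drift described above.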

Agentic Systems vs Traditional Automation Platforms

A common question is whether agentic approaches simply replace existing automation tools such as RPA or workflow engines. The answer is nuanced.

| Feature | RPA | Workflow Engines | Agentic Systems |
|---|---|---|---|
| Flexibility | Low | Medium | High |
| Decision-making | Rule-based | Conditional | Probabilistic |
| Adaptability | Minimal | Limited | High |
| Governance | Process-level | System-level | Multi-layer |
| Failure Handling | Brittle | Predictable | Dynamic |

These systems do not replace deterministic automation. Instead, they complement it by handling ambiguity, exceptions, and dynamic decision paths that rigid systems cannot manage.

Decision Ownership in Agentic Workflows

As AI gains agency, decision ownership becomes the central governance challenge.

Key questions include:

- Who is accountable for agent actions?
- How are decisions reviewed?
- When is escalation required?

| Ownership Model | Description | Risk |
|---|---|---|
| Human-Owned | AI advises only | Low |
| Shared | AI executes under oversight | Medium |
| System-Owned | AI owns outcomes | High |

Most organizations adopt shared ownership models, where agents act within boundaries but humans retain ultimate responsibility.
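A shared-ownership model can be encoded as a routing rule that refuses to act without a named owner and escalates anything risky or irreversible. The `Decision` fields, the 0.3 risk limit, and the risk score itself are assumptions for illustration; a real system would derive them from its own risk policy.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Decision:
    action: str
    risk_score: float       # 0.0 (trivial) to 1.0 (severe); assumed to come from a risk model
    reversible: bool
    accountable_owner: str  # the named human who answers for this action


def route(decision: Decision, risk_limit: float = 0.3) -> str:
    """Shared ownership: the agent acts on low-risk, reversible work; humans own the rest."""
    if not decision.accountable_owner:
        raise ValueError(f"no accountable owner for {decision.action!r}; refusing to act")
    if not decision.reversible or decision.risk_score > risk_limit:
        return f"escalate to {decision.accountable_owner}"
    return "execute and log for owner review"
```

Making the owner a required field answers the accountability question before any action runs, and the escalation condition answers when a human must step in.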

Reliability Engineering for Autonomous Workflows

Reliability in agentic architectures is not achieved through accuracy alone. It requires engineering discipline.

Key strategies include:

- Redundant validation checks
- Confidence thresholds for execution
- Graceful degradation
- Rollback mechanisms

Such systems must be designed to fail safely, not optimally.
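The strategies above can be combined into one wrapper: skip execution below a confidence threshold, run redundant checks after acting, and roll back when any check fails or the action raises. This sketch is hypothetical (`guarded_execute`, the 0.8 threshold, and the replica example are not from any particular framework) and assumes every action comes paired with a rollback that restores the prior state.

```python
from typing import Callable


def guarded_execute(
    apply: Callable[[], None],
    rollback: Callable[[], None],
    checks: list[Callable[[], bool]],
    confidence: float,
    threshold: float = 0.8,
) -> str:
    """Act only above a confidence threshold, verify afterwards, undo on failure."""
    if confidence < threshold:
        return "degraded: handed to a human"      # graceful degradation, no action taken
    try:
        apply()
        passed = all(check() for check in checks)  # redundant validation checks
    except Exception:
        passed = False                             # an exception counts as a failed check
    if passed:
        return "committed"
    rollback()                                     # fail safely back to the prior state
    return "rolled back"


# Hypothetical example: an agent proposes scaling a service beyond a safe limit.
config = {"replicas": 2}


def scale_up() -> None:
    config["replicas"] = 4


def undo() -> None:
    config["replicas"] = 2


checks = [lambda: config["replicas"] <= 3, lambda: config["replicas"] > 0]
outcome = guarded_execute(scale_up, undo, checks, confidence=0.9)
low_confidence = guarded_execute(scale_up, undo, checks, confidence=0.5)
```

The first call acts, fails a validation limit, and is undone; the low-confidence call never touches the configuration at all.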

Observability and Metrics for Agentic Systems

You cannot govern what you cannot observe.

Effective observability includes:

- Action logs
- Tool invocation traces
- Decision rationales
- Outcome verification

| Metric | Purpose |
|---|---|
| Task success rate | Execution reliability |
| Human intervention rate | Trust maturity |
| Rollback frequency | Risk exposure |
| Tool error rate | Integration health |

Observability transforms autonomy from a gamble into a measurable capability.
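The metrics in the table can be computed directly from structured action logs. The sketch assumes a hypothetical `ActionRecord` per task; the four sample records are invented to show the arithmetic, not real measurements.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActionRecord:
    task_id: str
    succeeded: bool
    human_intervened: bool
    rolled_back: bool
    tool_calls: int
    tool_errors: int


def _ratio(numerator: float, denominator: float) -> float:
    return numerator / denominator if denominator else 0.0


def agent_metrics(log: list[ActionRecord]) -> dict[str, float]:
    """Compute the four metrics from the table over a window of task records."""
    n = len(log)
    return {
        "task_success_rate": _ratio(sum(r.succeeded for r in log), n),
        "human_intervention_rate": _ratio(sum(r.human_intervened for r in log), n),
        "rollback_frequency": _ratio(sum(r.rolled_back for r in log), n),
        "tool_error_rate": _ratio(sum(r.tool_errors for r in log),
                                  sum(r.tool_calls for r in log)),
    }


log = [
    ActionRecord("t1", True, False, False, tool_calls=3, tool_errors=0),
    ActionRecord("t2", True, True, False, tool_calls=2, tool_errors=1),
    ActionRecord("t3", False, True, True, tool_calls=4, tool_errors=1),
    ActionRecord("t4", True, False, False, tool_calls=1, tool_errors=0),
]
metrics = agent_metrics(log)
```

With the sample log, three of four tasks succeed (0.75), two needed a human (0.5), one was rolled back (0.25), and 2 of 10 tool calls failed (0.2). Tracking these values over time, rather than as single snapshots, is what shows whether trust is actually increasing.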

Security Risks in Agentic Architectures

Agentic workflows expand the attack surface by combining reasoning with execution.

| Risk | Description |
|---|---|
| Tool misuse | Unauthorized actions |
| Privilege escalation | Excessive access |
| Feedback loops | Self-reinforcing errors |
| Data exfiltration | Indirect leakage |

Security must be embedded at the architecture level, not bolted on later.
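Of these risks, feedback loops are the easiest to illustrate in a few lines. A common mitigation is a circuit breaker that halts an agent which repeats the same action too often or exceeds a step budget. The sketch below is hypothetical, and the limits are arbitrary example values.

```python
from collections import Counter


class LoopBreaker:
    """Circuit breaker against self-reinforcing loops: caps total steps and repeats."""

    def __init__(self, max_steps: int = 20, max_repeats: int = 3) -> None:
        self.max_steps = max_steps
        self.max_repeats = max_repeats
        self.steps = 0
        self.seen = Counter()   # action -> number of times attempted
        self.tripped = False

    def permit(self, action: str) -> bool:
        if self.tripped:                 # latched: stays open until a human resets it
            return False
        self.steps += 1
        self.seen[action] += 1
        if self.steps > self.max_steps or self.seen[action] > self.max_repeats:
            self.tripped = True
        return not self.tripped


breaker = LoopBreaker(max_steps=10, max_repeats=2)
actions = ["query logs", "retry deploy", "retry deploy", "retry deploy", "query logs"]
decisions = [breaker.permit(a) for a in actions]
```

The breaker trips on the third identical retry and stays tripped, so even the unrelated final action is refused until a human intervenes.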

Enterprise Use Cases of Agentic Workflows

Organizations adopt agent-based systems selectively, focusing on areas where controlled autonomy delivers measurable value.

Operations and IT

- Incident triage
- Automated remediation
- Infrastructure coordination

Engineering and Manufacturing

- Design validation
- Compliance checking
- Impact analysis

In these domains, agents enhance judgment rather than replace it.

Adoption Maturity Model for Agentic AI Systems

| Stage | Characteristics |
|---|---|
| Experimental | Prototypes and demos |
| Assisted | Human-approved execution |
| Integrated | Workflow ownership |
| Optimized | Continuous improvement |
| Trusted | Strategic reliance |

Most organizations underestimate the time required to move beyond experimentation.

Economic Impact of Agentic AI Systems

The value of these systems lies not in headcount reduction, but in:

- Faster cycle times
- Reduced error costs
- Improved decision quality
- Scalability of expertise

Organizations that frame adoption purely as cost-cutting often fail to realize the strategic potential of these systems.

Why Most Agentic Implementations Will Fail

Failure rarely stems from model limitations. It stems from:

- Overconfidence in autonomy
- Weak governance
- Lack of ownership
- Insufficient observability

Common anti-patterns include treating agents as products, ignoring rollback design, lacking escalation paths, and maintaining no audit trail.

The Future of Work with Agentic AI Systems

These systems will shift humans away from execution and toward supervision, judgment, and strategy. Work will increasingly involve defining objectives, managing exceptions, and validating outcomes.

Autonomy does not remove responsibility—it concentrates it.

Key Principles for Building Trustworthy Agentic AI Systems

- Autonomy must be earned
- Governance precedes scale
- Validation outweighs optimization
- Transparency builds trust
- Human override is mandatory

These principles separate sustainable systems from fragile experiments.

Conclusion – From Assistants to Accountable Autonomy

Agentic AI Systems represent a structural shift in how intelligence is operationalized. They move AI from conversation into execution, from assistance into accountability.

Organizations that succeed will not be those chasing the most powerful models, but those designing systems that deserve trust. Agentic AI Systems are not about removing humans from the loop—they are about building loops that work reliably, safely, and at scale.

Agentic AI Systems as Socio-Technical Systems

A critical mistake in many discussions is treating these platforms as purely technical constructs. In reality, they are socio-technical systems, where human roles, organizational incentives, and governance structures interact continuously with autonomous behavior.

These systems shape—and are shaped by—how teams define responsibility, escalation, and trust. When failures occur, the root cause is often misalignment between technical autonomy and organizational readiness.

Final Leadership Takeaway

Agentic AI Systems succeed not because they are intelligent, but because they are disciplined. Discipline in architecture. Discipline in governance. Discipline in organizational alignment.

Autonomy without discipline is chaos.

Discipline without autonomy is stagnation.

These systems exist at the intersection of both.

FAQs on Agentic AI Systems

1. What are Agentic AI Systems in simple terms?

Agentic AI Systems are AI-driven systems that can pursue goals, execute multi-step tasks, and adapt actions over time, rather than simply responding to prompts.

Key traits include:

- Goal-oriented behavior
- Multi-step decision-making
- Ability to act using tools and workflows

2. How are Agentic AI Systems different from AI assistants?

AI assistants mainly respond to user input, while Agentic AI Systems can plan and execute workflows independently within defined boundaries.

Core differences:

- Reactive vs goal-driven behavior
- Stateless vs stateful operation
- Human-led vs system-led execution

3. Are Agentic AI Systems fully autonomous?

Most real-world deployments are not fully autonomous. They operate under bounded or supervised autonomy.

Typical controls include:

- Approval checkpoints
- Human override mechanisms
- Policy and risk constraints

4. Why are enterprises adopting agentic approaches now?

Enterprises need AI that can handle complex workflows, exceptions, and cross-system coordination—not just isolated tasks.

Drivers include:

- Operational scale
- Workflow complexity
- Need for faster decision cycles

5. Do Agentic AI Systems replace human workers?

No. These systems change how humans work, not whether they work.

Human roles shift toward:

- Supervision and validation
- Exception handling
- Strategic decision-making

6. What are the biggest risks of Agentic AI Systems?

The main risks come from uncontrolled autonomy, not from intelligence itself.

Common risk areas:

- Tool misuse
- Weak governance
- Poor observability
- Unclear accountability

7. How is governance handled in agentic AI deployments?

Governance is built into the system architecture rather than added later.

Common governance mechanisms:

- Policy enforcement layers
- Human-in-the-loop controls
- Audit logs and decision tracing

8. Which industries benefit most from agentic workflows?

Industries with complex, exception-heavy processes gain the most value.

Examples include:

- IT operations and infrastructure
- Engineering and manufacturing
- Finance, logistics, and compliance-driven domains

9. How do Agentic AI Systems use tools safely?

Safe tool usage is achieved through strict access control and monitoring.

Best practices include:

- Least-privilege permissions
- Explicit tool authorization
- Sandboxed execution environments

10. What role does memory play in agentic systems?

Memory allows agentic systems to retain context, learn from outcomes, and maintain workflow continuity.

Memory must be:

- Purpose-specific
- Governed and auditable
- Regularly validated to avoid drift

11. How do organizations measure trust in agentic AI?

Trust is measured indirectly through operational metrics, not opinions.

Common trust indicators:

- Reduced human intervention
- Stable execution outcomes
- Low rollback and error rates

12. Can Agentic AI Systems be used in regulated industries?

Yes, but only with strong governance and explainability.

Regulated deployments require:

- Transparent decision logs
- Clear accountability models
- Defined limits on autonomy

13. How do agentic systems differ from RPA or workflow engines?

Traditional automation follows predefined rules, while agentic systems adapt decisions dynamically.

Key differences:

- Rule-based vs goal-driven logic
- Static flows vs adaptive execution
- Limited vs contextual decision-making

14. What is shadow mode in Agentic AI Systems?

Shadow mode allows systems to observe and recommend actions without executing them.

Benefits include:

- Risk-free evaluation
- Governance testing
- Error pattern discovery
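Shadow mode can be sketched as a runner that records every proposed action but only executes when shadow mode is switched off, plus a simple agreement rate against what humans actually did. The names are hypothetical, and agreement with human decisions is only one possible evaluation signal.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class ShadowModeRunner:
    """Records every proposed action; only executes when shadow mode is off."""
    execute: Callable[[str], None]
    shadow: bool = True
    recommendations: list[str] = field(default_factory=list)

    def handle(self, proposed_action: str) -> None:
        self.recommendations.append(proposed_action)  # always logged for review
        if not self.shadow:
            self.execute(proposed_action)


def agreement_rate(recommended: list[str], human_actions: list[str]) -> float:
    """Share of cases where the agent proposed what the human actually did."""
    pairs = list(zip(recommended, human_actions))
    return sum(a == b for a, b in pairs) / len(pairs) if pairs else 0.0


executed: list[str] = []
runner = ShadowModeRunner(execute=executed.append)
for action in ["restart api", "page on-call", "scale database"]:
    runner.handle(action)
human_log = ["restart api", "page on-call", "no action"]
rate = agreement_rate(runner.recommendations, human_log)
```

Two of the three recommendations match the human log, giving an agreement rate of about 0.67, and nothing was executed while the runner stayed in shadow mode.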

15. Are Agentic AI Systems a long-term architectural shift?

Yes. They represent a foundational shift toward workflow-oriented AI.

Long-term impact includes:

- AI embedded into operations
- Hybrid human–AI execution models
- New standards for governance and accountability