1. Introduction: Why the World Is Moving to the Edge

Imagine this.

A patient in an ICU is connected to a monitor tracking heart rate, oxygen saturation, respiratory pattern, and dozens of micro-signals. Traditionally, this data would travel to a cloud server to be analyzed for patterns. But in those few hundred milliseconds of travel time, a critical event could be missed.

Now imagine the same monitor running the AI model itself.

The moment a dangerous irregularity appears, the system reacts. No internet. No delay. No cloud bill.

Just instant intelligence, exactly where it’s needed.

That’s the promise of Edge AI—moving intelligence from distant data centers to the devices around us.

This shift isn’t theoretical. In 2025, Edge AI is everywhere:

- In the cameras watching over cities
- In the cars navigating without human hands
- In robots moving goods inside warehouses
- In machines diagnosing crops in remote farms
- In the smartphones and wearables we use every day

Cloud AI changed technology.

Edge AI is redefining reality.

This guide takes you through the nine most powerful trends shifting the world toward real-time intelligence.

2. What Exactly Is Edge AI?

Edge AI means running AI models locally on devices, instead of relying entirely on cloud servers.

That means:

- Data is processed closer to where it’s generated
- Decisions happen in milliseconds
- Devices can work offline or with poor connectivity
- Sensitive information never leaves the device

Examples of edge devices include:

- CCTV cameras
- IoT sensors
- Smartwatches
- Industrial robots
- Autonomous drones
- Point-of-sale systems

Instead of sending a video stream to the cloud for object detection, the camera itself can understand:

- Who entered
- What object appeared
- Whether a threat exists
- Which action to take next

| Feature | Edge AI | Cloud AI |
|---|---|---|
| Where data is processed | On-device | Remote servers |
| Latency | <10 ms | 100–300 ms |
| Internet needed | No | Yes |
| Privacy | High | Moderate |
| Cost | Lower long-term | High recurring |
| Best for | Real-time intelligence | Large-scale training |

This “local intelligence” opens up a world of possibilities—but it also requires specialized hardware, optimized models, and clever engineering.

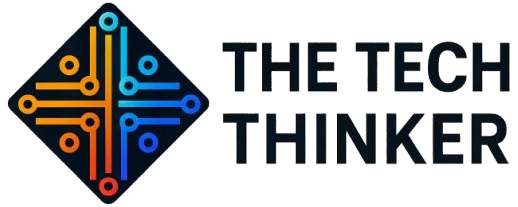

3. How Edge AI Works

Even though Edge AI sounds complex, the workflow is actually easy to understand.

Step 1: Sensor Captures Data

A camera captures video.

A microphone captures sound.

A sensor captures motion or temperature.

Step 2: On-Device Model Processes the Data

A lightweight AI model—optimized using quantization or pruning—runs on a chip like:

- Google Coral Edge TPU
- NVIDIA Jetson
- Apple Neural Engine
- Qualcomm AI Engine
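
To make "optimized using quantization" concrete, here is a minimal sketch of symmetric 8-bit quantization in plain Python. The function names and the sample weights are illustrative assumptions; real toolchains for the chips above use their own converters and usually quantize per layer or per channel.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: map floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0  # float value of one integer step
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float weights from the stored integers."""
    return [v * scale for v in q]


# Hypothetical weights, chosen only for illustration.
weights = [0.82, -1.27, 0.003, 0.5, -0.64]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)

# The rounding error per weight is at most half of one integer step.
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, round(scale, 4), round(max_error, 4))
```

Each weight now needs one byte instead of four, at the cost of a small rounding error, which is the trade that lets a model fit on a low-power accelerator.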

Step 3: Device Generates Real-Time Output

The model classifies, predicts, or detects events instantly:

- “Person detected.”
- “Faulty machine vibration spotted.”
- “Customer picked an item from shelf.”

Step 4: Action Happens Immediately

The device triggers an alarm, action, log entry, or robot movement.

| Step | What Happens | Example |
|---|---|---|
| 1. Sense | Device captures data | Camera video frame |
| 2. Process | AI model runs locally | Object detection |
| 3. Decide | AI predicts result | “Person detected” |
| 4. Act | Device triggers output | Alarm or automation |
| 5. Sync (optional) | Cloud stores or updates models | Fleet analytics |

Optional Step 5: Cloud Sync

The cloud may still be used for:

- Long-term storage
- Model updates
- Fleet analytics
- Large-scale learning

But the intelligence remains on the edge.
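
The sense, process, decide, act, and optional sync steps can be sketched as a small loop. This is a toy illustration under stated assumptions: `EdgeDevice`, its threshold, and the scoring rule are hypothetical stand-ins, not a real device SDK or a real model.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeDevice:
    threshold: float = 0.8                              # hypothetical alert threshold
    alarms: list = field(default_factory=list)          # actions taken locally
    pending_sync: list = field(default_factory=list)    # events queued for the cloud

    def process(self, reading: float) -> float:
        """Stand-in for the on-device model: an anomaly score in [0, 1]."""
        return min(1.0, abs(reading - 50.0) / 50.0)

    def decide(self, score: float) -> str:
        return "alert" if score >= self.threshold else "ok"

    def act(self, decision: str, reading: float) -> None:
        if decision == "alert":
            self.alarms.append(reading)                 # stand-in for a local alarm
        self.pending_sync.append((reading, decision))   # kept for a later sync

    def step(self, reading: float) -> str:
        """Steps 1-4: one sensed reading goes through process, decide, act."""
        decision = self.decide(self.process(reading))
        self.act(decision, reading)
        return decision

    def sync(self) -> list:
        """Optional step 5: hand queued events to the cloud and clear the queue."""
        batch, self.pending_sync = self.pending_sync, []
        return batch


device = EdgeDevice()
decisions = [device.step(r) for r in [52.0, 48.5, 97.0]]
print(decisions, device.alarms)
```

Note that the alarm fires inside `step`, before any network call; `sync` can run minutes later or not at all without affecting the decision.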

4. Why Edge AI Became a 2025 Necessity

Four big shifts pushed Edge AI to the front line:

- Too much data, streaming constantly from cameras, IoT sensors, and machines.
- Cloud bandwidth is expensive, especially for video analytics.
- Businesses need real-time results, not hundreds of milliseconds of delay.
- Regulations demand stricter data privacy, which local processing helps address.

| Reason | Description |
|---|---|
| Explosion of data | Too much sensor & video data for cloud to handle |
| Expensive cloud analytics | Real-time video inference is costly |
| Need for real-time decisions | Business operations need instant insights |
| Stricter privacy rules | Local processing meets compliance |

In short:

We created more data than the cloud can handle efficiently.

Edge AI closes that gap.
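
A back-of-the-envelope calculation shows why streaming video to the cloud gets expensive. The bitrate, camera count, and event sizes below are illustrative assumptions, not measurements.

```python
def monthly_stream_gb(bitrate_mbps: float, cameras: int, days: int = 30) -> float:
    """Upload volume if every camera streams video to the cloud around the clock."""
    seconds = days * 24 * 3600
    megabits = bitrate_mbps * seconds * cameras
    return megabits / 8 / 1000  # megabits -> megabytes -> gigabytes


def monthly_event_gb(events_per_day: int, bytes_per_event: int,
                     cameras: int, days: int = 30) -> float:
    """Upload volume if each camera runs inference locally and sends only events."""
    return events_per_day * bytes_per_event * cameras * days / 1e9


# Assumed fleet: 100 cameras at 4 Mbps, or 2,000 small events per camera per day.
stream_gb = monthly_stream_gb(bitrate_mbps=4.0, cameras=100)
event_gb = monthly_event_gb(events_per_day=2000, bytes_per_event=500, cameras=100)
print(f"streaming: {stream_gb:,.0f} GB/month, events only: {event_gb:.1f} GB/month")
```

Under these assumptions the fleet uploads about 129,600 GB per month when streaming and about 3 GB per month when sending events only.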

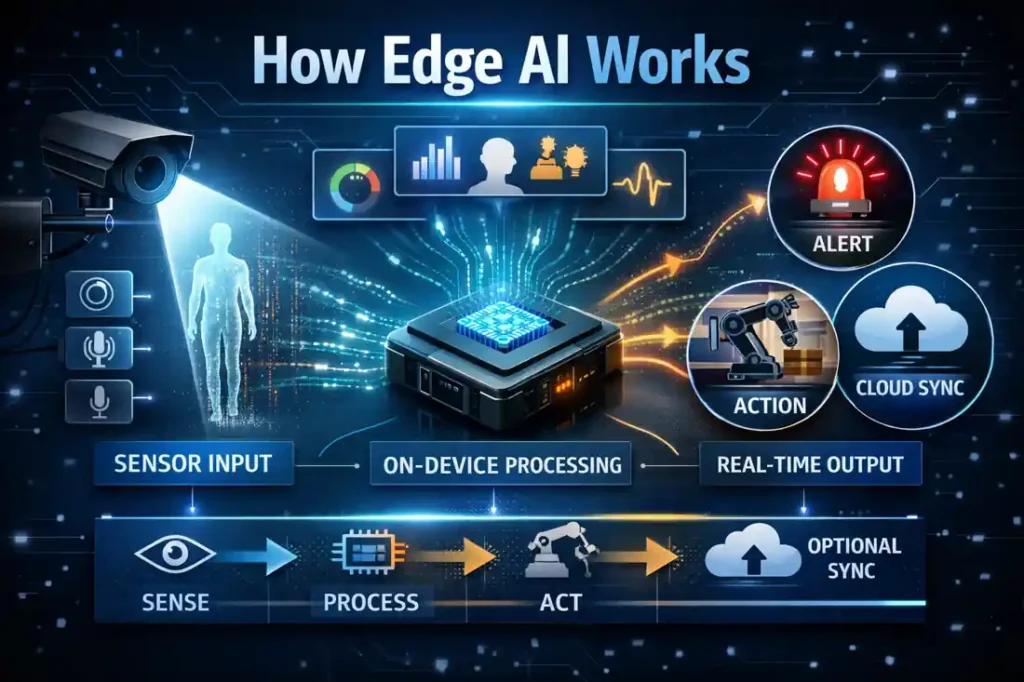

5. Trend #1: Generative AI Moves to the Edge

The biggest surprise of 2025?

Generative AI is shrinking.

Small language models (“small LLMs”) started running on:

- Smartphones
- Laptops
- IoT hubs
- Edge servers
- Vehicles

These tiny generative models enable:

- On-device summarization
- On-device captioning & transcription
- Instant chatbot responses without cloud
- Personalized AI that doesn’t leak data
- Offline copilots for workers, technicians, and students

Why this matters:

- No internet dependency
- Zero data exposure
- Ultra-low latency
- Massive cost savings

In 2025, companies started shipping phones with small LLMs that run directly on the phone’s built-in AI accelerator.

Edge AI + GenAI is becoming the new standard.

| Capability | What It Means | Benefit |
|---|---|---|
| On-device summary | Text summarization offline | Privacy + speed |
| On-device captioning | Describe images locally | Helpful for vision devices |
| Offline chatbot | Real-time replies | No cloud cost |
| Personalized LLMs | Local user preference learning | Highly private |
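
Why do "small" generative models fit on a phone at all? A rough estimate of weight storage helps. The 3-billion-parameter figure is an assumed example size, and the calculation covers weights only; activations and caches need extra memory on top.

```python
def model_size_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate storage for the weights alone, in gigabytes."""
    return num_params * bits_per_weight / 8 / 1e9


# Assumed example: a 3-billion-parameter model at different precisions.
sizes = {bits: model_size_gb(3e9, bits) for bits in (32, 16, 8, 4)}
for bits, gb in sizes.items():
    print(f"{bits:>2}-bit weights: {gb:.1f} GB")
```

Going from 32-bit to 4-bit weights shrinks this hypothetical model from 12 GB to 1.5 GB, which is the difference between needing a server and fitting in a phone's memory.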

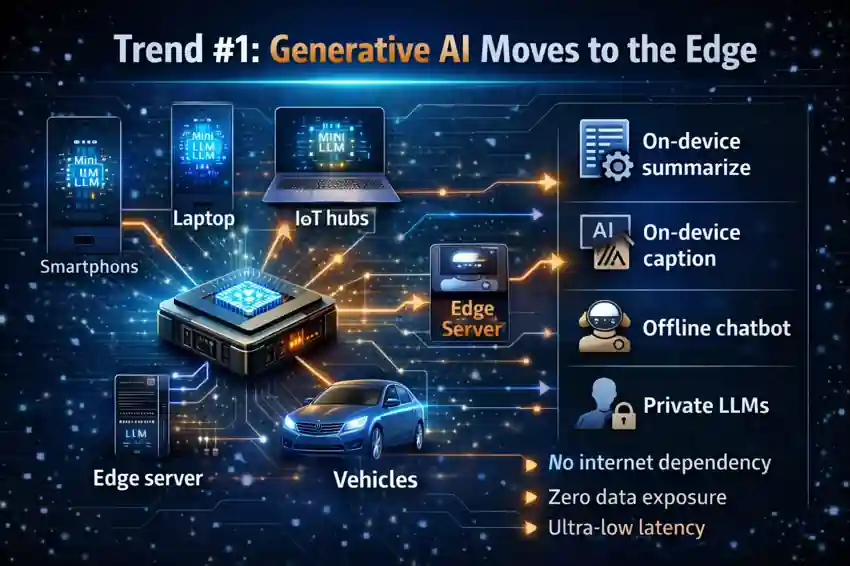

6. Trend #2: Ultra-Low Latency Becomes Standard

Latency is the new productivity.

Cloud AI typically runs with round-trip delays of 100–300 ms.

Edge AI can bring this down to under 10 milliseconds, because inference runs on the device and no network round trip is involved.

This changes everything:

- Machines detect problems faster
- Robots coordinate movements in real-time
- Cars react instantly to obstacles
- Retail systems track items as they move
- Cameras identify threats without lag

As industries automate, delay becomes unacceptable.

Edge AI solves that with precision.

| System | Typical Latency | Use Case Suitability |
|---|---|---|
| Cloud AI | 100–300 ms | Non-urgent processing |
| Hybrid AI | 30–80 ms | Semi-real-time |
| Edge AI | <10 ms | Safety-critical tasks |
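
To see what these latencies mean physically, consider how far a vehicle travels before a decision arrives. The sketch below uses the upper bound of each range in the table; the 100 km/h speed is an assumed example.

```python
def distance_during_latency_m(speed_kmh: float, latency_ms: float) -> float:
    """Metres travelled at a constant speed while waiting for a result."""
    speed_m_per_s = speed_kmh * 1000 / 3600
    return speed_m_per_s * latency_ms / 1000


# Upper-bound latencies from the table, at an assumed 100 km/h.
latencies_ms = {"Cloud AI": 300, "Hybrid AI": 80, "Edge AI": 10}
blind_distance = {
    name: distance_during_latency_m(100, ms) for name, ms in latencies_ms.items()
}
for name, metres in blind_distance.items():
    print(f"{name}: {metres:.2f} m travelled before a decision arrives")
```

At that speed, a 300 ms round trip corresponds to roughly 8.3 m of travel, while 10 ms corresponds to under 0.3 m.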

7. Trend #3: Edge AI Cameras and Vision Systems Explode

The fastest-growing segment in Edge AI?

Computer vision at the edge.

Retail stores, warehouses, hospitals, airports, and factories deploy cameras that:

- Recognize objects
- Count people
- Detect intruders
- Track movement
- Identify defective products
- Monitor safety compliance

Instead of sending video streams to the cloud (expensive + slow), cameras run AI models inside the device.

Benefits:

- Reduced bandwidth
- Faster insights
- Private local processing
- No video sent externally

Edge vision systems are now powering:

- Autonomous checkout stores
- Smart city surveillance
- Industrial quality inspection
- Traffic management
- Airport security

This trend alone is creating multi-billion-dollar industries.

| Industry | Edge Vision Task | Why It Matters |
|---|---|---|
| Retail | Smart checkout | Fast, cashierless shopping |
| Manufacturing | Defect detection | Higher quality output |
| Smart cities | Traffic analytics | Reduced congestion |
| Airports | Security scanning | Faster clearance |
| Healthcare | Patient monitoring | Immediate alerts |

8. Trend #4: AI Accelerators Get Cheaper and More Capable

AI chips at the edge used to cost thousands of dollars.

Today, you can get:

- Google Coral TPU ($59)
- NVIDIA Jetson Nano ($149)
- Qualcomm AI accelerators built into mainstream phones
- Apple Neural Engine in recent iPhones, iPads, and Macs

These chips are designed for:

- 8-bit quantized models
- Edge-optimized CNNs
- Low-power inference
- High-throughput real-time processing

This affordability unlocked:

- Smart cameras
- Home robots
- Voice assistants
- Wearables
- Smart appliances
- Industrial sensors

Edge AI is not only powerful, it’s now cost-effective.

| Hardware | Strength | Typical Use |
|---|---|---|
| Google Coral TPU | Ultra-fast quantized inference | Vision devices |
| Jetson Nano | GPU-based | Robotics |
| Qualcomm AI Engine | Mobile AI | Smartphones |
| Apple Neural Engine | ML acceleration | iPhones & iPads |
| TinyML Boards | Ultra-low power | Wearables & sensors |

9. Trend #5: On-Device Privacy Becomes a Global Priority

Data privacy laws tightened dramatically between 2023 and 2025.

Governments now require:

- Minimal data transfer
- Strict retention policies
- Clear audit trails
- Reduced cloud dependency

Edge AI became the natural solution because:

- Data doesn’t leave the device
- Sensitive info stays private
- Processing happens locally
- Only anonymized outcomes are shared

Hospitals, banks, and government agencies are moving to Edge AI first because privacy = compliance.
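
What "only anonymized outcomes are shared" can look like in practice: the device reduces raw detections to aggregate counts before anything is transmitted. The record fields below are hypothetical, and simple aggregation like this is only a sketch; it does not by itself guarantee anonymity under any particular regulation.

```python
from collections import Counter


def summarize_locally(detections):
    """Reduce raw per-person detections to per-zone counts.

    Identifiers and timestamps are dropped before anything leaves the device.
    """
    counts = Counter(d["zone"] for d in detections)
    return {"zone_counts": dict(counts), "total": len(detections)}


# Hypothetical raw detections that stay on the device.
raw = [
    {"person_id": "a91", "zone": "entrance", "ts": 1},
    {"person_id": "b07", "zone": "entrance", "ts": 2},
    {"person_id": "a91", "zone": "pharmacy", "ts": 9},
]
payload = summarize_locally(raw)
print(payload)
```

Only `payload` would be sent upstream; the `raw` list, with its identifiers, never needs to leave the device.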

10. Trend #6: Edge AI + 5G/6G = Real-Time Everything

5G brought low latency.

6G promises sub-millisecond latency.

Edge AI + 5G/6G is a perfect combo:

- Faster coordination of distributed devices
- Real-time HD video processing
- Autonomous vehicles talking to each other
- Factory machines communicating instantly
- Drones forming networks mid-air

5G/6G doesn’t replace edge computing—it amplifies it.

11. Trend #7: Industry 4.0 Brings Autonomous Factories

Factories now use edge intelligence to run themselves:

- Robots adjust speed dynamically
- Conveyor belts self-optimize
- Machines predict failures
- Cameras inspect quality in real time
- Sensors ensure safety compliance

The cloud alone cannot support this scale.

Edge computing makes factories:

- Faster
- More reliable
- More efficient
- Less costly

Automation depends on edge intelligence.

And in 2025, every major manufacturing company is adopting it.

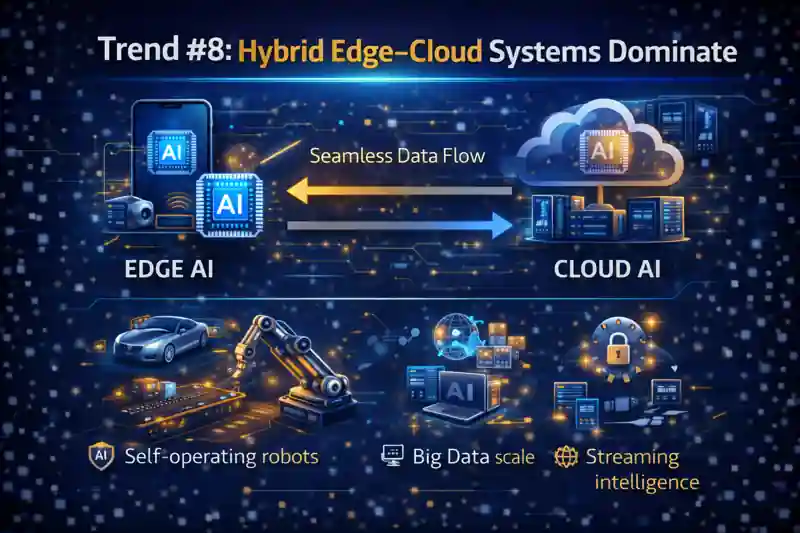

12. Trend #8: Hybrid Edge–Cloud Systems Dominate

Companies discovered the best approach is not cloud OR edge, but both.

The edge handles:

- Real-time inference
- Device-level actions
- Privacy-sensitive data

The cloud handles:

- Long-term storage
- Training and retraining
- Analytics
- Monitoring dashboards

The future is symmetric intelligence—models that fluidly shift between cloud and edge depending on context.

| Task | Edge | Cloud |
|---|---|---|
| Real-time inference | ✔ | ❌ |
| Physical-world actions | ✔ | ❌ |
| Privacy-critical data | ✔ | ❌ |
| Long-term storage | ❌ | ✔ |
| Large model training | ❌ | ✔ |
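
The split in the table can be expressed as a simple placement rule. This is a sketch under assumptions: the `Task` fields, the rule order, and the 100 ms threshold (taken from the lower end of the cloud latency range quoted earlier) are illustrative choices, not a standard policy.

```python
from dataclasses import dataclass

# Assumed minimum cloud round trip, from the 100–300 ms range quoted earlier.
CLOUD_ROUND_TRIP_MS = 100.0


@dataclass
class Task:
    name: str
    deadline_ms: float            # how quickly a result is needed
    privacy_sensitive: bool = False
    needs_training: bool = False


def place(task: Task) -> str:
    """Return "edge" or "cloud" for a task, following the table's split."""
    if task.privacy_sensitive:
        return "edge"             # privacy-critical data stays local
    if task.needs_training:
        return "cloud"            # large-scale training needs data-center compute
    if task.deadline_ms < CLOUD_ROUND_TRIP_MS:
        return "edge"             # a cloud round trip would miss the deadline
    return "cloud"


tasks = [
    Task("obstacle detection", deadline_ms=10),
    Task("patient vitals", deadline_ms=500, privacy_sensitive=True),
    Task("fleet analytics", deadline_ms=60_000),
    Task("model retraining", deadline_ms=3_600_000, needs_training=True),
]
placements = {t.name: place(t) for t in tasks}
print(placements)
```

A real system would weigh more factors (battery, connectivity, model size), but the shape of the decision stays the same: deadlines and privacy pull work to the edge, heavy compute pulls it to the cloud.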

13. Trend #9: TinyML Makes Small Devices Brilliant

TinyML is the art of running AI on microcontrollers (MCUs).

We’re talking chips that cost less than $5 and run on coin batteries.

These tiny devices now perform:

- Keyword spotting
- Basic vision tasks
- Gesture recognition
- Environmental monitoring
- Predictive maintenance

And they do it with:

- Ultra-low power consumption
- Zero connectivity requirements
- Minimal memory footprint

TinyML is turning:

- Lightbulbs
- Thermostats
- Wearables
- Sensors
- Door locks

…into intelligent devices.

Edge AI is no longer limited to big chips—

even the smallest devices are getting smarter.

| Feature | Edge AI | TinyML |
|---|---|---|
| Hardware power | Moderate | Very low |
| Model size | Medium | Very small |
| Power usage | Low–medium | Ultra-low |
| Use cases | Cameras, robots | Sensors, wearables |
| Connectivity | Optional | Often offline |
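
How can a microcontroller run on a coin battery? The answer is duty cycling: the chip sleeps almost all the time and wakes briefly to run inference. The estimate below is idealized, and every number in it (a 220 mAh cell, 5 mA active, 5 µA asleep, active 1% of the time) is an assumed example rather than a datasheet value.

```python
def battery_life_days(capacity_mah: float, active_ma: float,
                      sleep_ma: float, active_fraction: float) -> float:
    """Idealized battery life from the time-weighted average current draw."""
    avg_ma = active_ma * active_fraction + sleep_ma * (1 - active_fraction)
    return capacity_mah / avg_ma / 24  # mAh / mA = hours, then hours -> days


# Assumed example values; self-discharge and peak-current limits are ignored.
duty_cycled = battery_life_days(220, active_ma=5.0, sleep_ma=0.005, active_fraction=0.01)
always_on = battery_life_days(220, active_ma=5.0, sleep_ma=0.005, active_fraction=1.0)
print(f"duty-cycled: {duty_cycled:.0f} days, always on: {always_on:.1f} days")
```

With these assumptions the same device lasts under two days when always on and roughly 167 days when it wakes only 1% of the time, which is why TinyML designs focus on short, infrequent bursts of inference.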

14. Challenges Slowing Down Edge AI

Even though Edge AI is booming, it faces important challenges:

| Challenge | Why It Matters |
|---|---|
| Limited compute | Hard to run large models locally |
| Battery limits | Edge devices must be power efficient |
| Model optimization | Needs expertise (quantization, pruning) |
| Fleet management | Updating many devices is difficult |
| Security | Edge devices are physically exposed |

1. Limited compute

Edge devices cannot run massive LLMs natively.

2. Power constraints

Battery-powered devices require efficient models.

3. Model optimization complexity

Quantization, pruning, and compression need expertise.

4. Distributed fleet management

Updating thousands of edge nodes is not trivial.

5. Security risks

Physical device tampering is a real concern.

Edge AI’s growth depends on solving these problems gracefully.
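
As a taste of the model optimization challenge, here is a minimal sketch of magnitude pruning, one common form of pruning: the weights closest to zero are set to exactly zero so the model can be stored and run more cheaply. The function name and sample weights are illustrative assumptions; in practice pruning is usually followed by fine-tuning to recover accuracy, which is where the expertise comes in.

```python
def prune_by_magnitude(weights, sparsity):
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest absolute value.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


# Hypothetical weights, chosen only for illustration.
weights = [0.9, -0.05, 0.4, 0.01, -0.7, 0.02, 0.3, -0.15]
pruned = prune_by_magnitude(weights, sparsity=0.5)
print(pruned)
```

Half of the weights are now zero, while the large weights that carry most of the signal are untouched.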

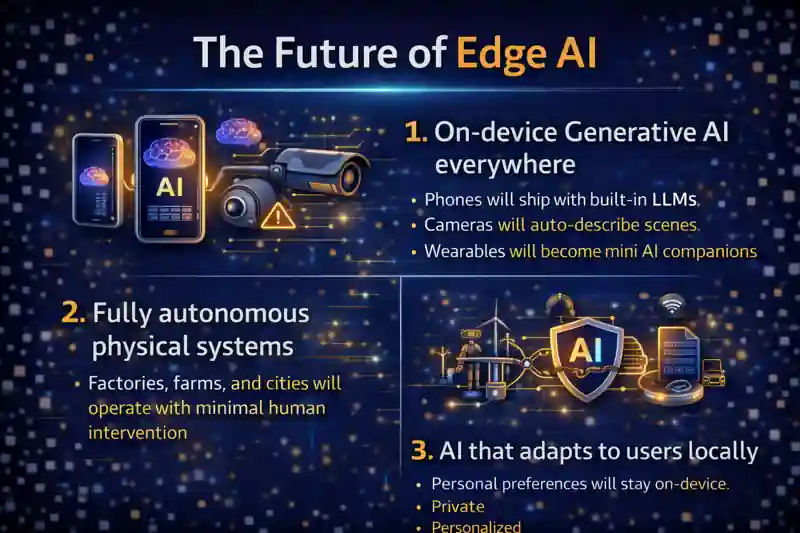

15. The Future of Edge AI

Three major transformations are coming:

1. On-device Generative AI everywhere

Phones will ship with built-in LLMs.

Cameras will auto-describe scenes.

Wearables will become mini AI companions.

2. Fully autonomous physical systems

Factories, farms, and cities will operate with minimal human intervention.

3. AI that adapts to users locally

Personal preferences will stay on-device.

AI becomes:

- Private
- Personalized
- Predictive

This is the most powerful trend of all.

16. Final Thoughts

The world is entering a new era of intelligence—

not stored in massive data centers,

but woven into the everyday devices around us.

Edge AI is not a trend.

It’s the foundation of real-time intelligence.

From retail to robotics, healthcare to manufacturing, transportation to smart homes, Edge AI is reshaping how technology sees, understands, and reacts to the world.

Businesses adopting Edge AI today will lead tomorrow’s innovations.

Because the future of intelligence is not far away.

It’s right beside us—running on the edge.

| Future Trend | Expected Outcome |
|---|---|
| On-device LLMs | Truly personal AI |
| 6G adoption | Near-zero latency intelligence |
| Autonomous factories | Human-free operations |
| Fully private AI | User data never leaves devices |

FAQ on Edge AI

1. What is Edge AI?

It is artificial intelligence that processes data directly on devices instead of in the cloud.

2. How does Edge AI work?

It works by running optimized machine learning models locally on hardware such as cameras, sensors, or mobile processors.

3. Why is Edge AI important in 2025?

It is important because it enables real-time decisions, reduces cloud dependency, and improves privacy.

4. What are the benefits of Edge AI?

The benefits include ultra-low latency, stronger privacy, lower bandwidth use, offline operation, and reduced cloud costs.

5. How is Edge AI different from Cloud AI?

It processes data on-device, while Cloud AI sends data to remote servers for analysis.

6. Where is Edge AI used in real life?

It is used in autonomous vehicles, smart cameras, healthcare monitors, retail checkout systems, robots, drones, and IoT devices.

7. What hardware is required for Edge AI?

It uses hardware like NVIDIA Jetson, Google Coral TPU, Qualcomm AI Engine, Apple Neural Engine, and TinyML microcontrollers.

8. What is TinyML in Edge AI?

TinyML is the use of ultra-small machine learning models on low-power microcontrollers inside sensors and wearable devices.

9. How does Edge AI improve data privacy?

It improves privacy by processing sensitive information locally so it never leaves the device.

10. Does Edge AI support generative AI models?

Yes, modern edge processors can run small generative AI models for offline chatbot responses, summarization, and captioning.

11. How does Edge AI reduce latency?

It reduces latency by eliminating the need to send data to the cloud, enabling responses in under 10 milliseconds.

12. Which industries benefit most from Edge AI?

Industries such as automotive, healthcare, retail, manufacturing, agriculture, and smart cities see the biggest benefits from Edge AI.

13. What challenges does Edge AI face?

It faces challenges like limited compute power, battery constraints, complex model optimization, device security risks, and fleet management issues.

14. How do companies deploy Edge AI systems?

Companies deploy Edge AI using lightweight models, edge accelerators, device-side processing, and hybrid edge–cloud architectures.

15. What is the future of Edge AI?

The future of Edge AI includes on-device generative AI, autonomous systems, 6G-enabled intelligence, and highly personalized private AI.