Introduction: The Generative AI Revolution in Dev (2025)

Generative AI in Software Development has moved from a novelty to a necessity. Early adopters first experimented with tools like the original GitHub Copilot (built on OpenAI Codex and launched in 2021), which could fill in small code snippets. By early 2024, teams had integrated AI into continuous integration pipelines for automated test generation and security scanning. Fast-forward to 2025: GPT-4o, GitHub Copilot X, and Anthropic Claude 3 collaborate with developers to generate entire microservices, refactor legacy monoliths, and maintain documentation in real time.

Recent model releases accelerated these advancements rapidly:

GPT-4o (May 2024): Its 128K-token context window lets the model take in large parts of a repository at once, enabling large-scale refactoring.

Copilot X: Now built on GPT-4o, it suggests multi-line code, proposes pull requests with security fixes, and integrates SAST to flag vulnerabilities before merge.

Claude 3 (March 2024): Focused on enterprise security, Claude 3 auto-redacts sensitive data, performs semantic refactoring, and generates high-coverage tests.

By March 2025, surveys show 72% of North American developers use AI daily (Stack Overflow), and 65% of Fortune 500 companies now embed AI in their dev workflows (Gartner). Funding for AI dev tools hit $2.8 billion in H1 2025—up 120% year-over-year (Crunchbase). Clearly, “Generative AI in Software Development” is not a buzzphrase—it’s the baseline expectation for staying competitive.

Impact on Software Development Workflows

How AI Is Reshaping Developer Roles

In 2025, developers are less “code typists” and more AI curators. Instead of manually writing boilerplate functions, teams craft precise prompts for AI. For example:

Prompt:

“Generate a Node.js Express controller for user registration with email verification, JWT authentication, and error handling.”

Within seconds, Copilot X (running GPT-4o) returns a complete controller, unit tests, and sample API docs. Developers then review, tweak edge-case handling, and merge, saving hours.

This shift gives rise to a new role: AI Prompt Engineer, who designs prompts that capture business logic, security constraints, and performance targets. Meanwhile, front-end, back-end, QA, and DevOps roles blend as AI fills gaps—one full-stack engineer, with AI help, can handle front-end React components, back-end APIs, and automated tests.

Speed, Accuracy & Collaboration Improvements

Faster Prototyping: Teams that once spent weeks building minimum viable products now scaffold an MVP in days. Asking Copilot X to “create a React signup form with Formik and Yup validation” yields a working, validated form component in minutes.

Fewer Bugs: AI-driven static analysis tools (e.g., CodexLint, used with GPT-4o) catch security issues—SQL injection, XSS—before code reaches CI. In practice, this cuts critical bug rates by up to 50%.

Seamless Collaboration: AI bots embedded in Slack or Teams triage issues automatically. For instance, an error logged to Sentry triggers a GPT-4o service that analyzes the stack trace and opens a Jira ticket:

Title: NullPointerException in `UserService.java`

Description: Occurs when `user` is null. Suggest adding `if (user != null)` before accessing `user.getId()`.

This reduces manual triage by 60% and keeps developers focused on higher-level problem solving.
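The Sentry-to-Jira flow above can be sketched as a helper that turns a Java stack trace and a model-written suggestion into a Jira issue payload. This is a minimal sketch that assumes Jira's REST API v2 create-issue endpoint (`POST /rest/api/2/issue`) and its standard `project`, `summary`, `description`, and `issuetype` fields. Both the GPT-4o call that writes the suggestion and the HTTP request itself are omitted. `build_jira_issue`, the `APP` project key, and the sample trace are hypothetical.

```python
import re

# Matches Java frames such as "at com.example.UserService.getUser(UserService.java:42)"
FRAME_RE = re.compile(r"at [\w.$]+\((?P<file>[\w$]+\.java):(?P<line>\d+)\)")


def build_jira_issue(project_key: str, stack_trace: str, suggestion: str) -> dict:
    """Build a Jira REST v2 issue payload from a Java stack trace."""
    lines = stack_trace.strip().splitlines()
    # The first line of a Java trace is "<fully.qualified.Exception>: <message>"
    exception = lines[0].split(":", 1)[0].rsplit(".", 1)[-1]
    match = FRAME_RE.search(stack_trace)  # first frame = where the error was thrown
    location = f"{match['file']}:{match['line']}" if match else "unknown location"
    return {
        "fields": {
            "project": {"key": project_key},
            "summary": f"{exception} in {location}",
            # {code} ... {code} is Jira wiki markup for a preformatted block
            "description": f"{suggestion}\n\n{{code}}\n{stack_trace.strip()}\n{{code}}",
            "issuetype": {"name": "Bug"},
        }
    }


trace = """java.lang.NullPointerException: Cannot invoke "User.getId()" because "user" is null
    at com.example.UserService.getUser(UserService.java:42)
    at com.example.UserController.show(UserController.java:17)"""

issue = build_jira_issue("APP", trace, "Add a null check before accessing user.getId().")
print(issue["fields"]["summary"])  # NullPointerException in UserService.java:42
```

A real service would POST this dictionary as JSON with authenticated credentials. Auto-assignment, as described later in this article, would add an `assignee` field.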

Shifts in Team Structures & Skill Requirements

In 2025, a typical development team might consist of:

AI Curators: Oversee AI-generated code, focus on architecture and security.

Prompt Engineers: Craft and refine prompts to guide AI.

Domain Experts: Ensure AI outputs align with business logic and compliance (healthcare, finance).

Soft skills (communication, system design, prompt engineering) now outweigh rote syntax memorization. Developers must continuously learn new AI features: new GPT-4o model snapshots, Copilot X security scanner updates, and Claude 3’s cloud deployment options on Amazon Bedrock and Google Cloud Vertex AI. This ever-evolving landscape demands a culture of constant upskilling.

Why Developers & Organizations Must Adopt Gen AI

Competitive Advantage for Early AI Adopters

By embracing generative AI in 2024–2025, companies achieved:

20–30% Faster Feature Delivery: Early adopters reported launching features 20–30% faster, and by 2025 those teams were also shipping with 40% fewer production bugs.

Higher Code Quality: Automated testing and security scanning cut critical bugs in half.

Talent Magnet: Developers now expect AI-enabled workflows—teams without AI face up to 35% higher turnover (Stack Overflow).

Failing to adapt risks being outpaced. In 2025, if competitors ship new features twice as fast with half the bugs, your market share will erode rapidly.

Risks of Ignoring AI

Talent Flight: Manual workflows drive developers away; AI-enabled teams lure top talent.

Delayed Releases: A two-week sprint without AI can stretch to four weeks, giving competitors a head start.

Rising Costs: Manual testing, code reviews, and bug fixes inflate budgets by up to 25% compared to AI-driven teams.

Forecasted ROI for AI-Driven Development

Cost Savings: Automating boilerplate code and tests can reduce developer costs by 15–25% yearly.

Revenue Uplift: Faster, higher-quality releases translate to a 5–10% revenue increase in year one.

3× ROI by 2027: According to Gartner, organizations will see a 3× return on AI investments by 2027, as AI reduces maintenance overhead and accelerates time to market.

In short, adopting generative AI is no longer optional—it’s essential for survival in 2025.

Key Generative AI Tools & Platforms

GitHub Copilot X

Core Features & Capabilities

Copilot X, built on GPT-4o, offers:

Multi-Line, Context-Aware Suggestions: It reads entire files and commit history to propose coherent code snippets.

Automated Pull Requests: Identifies security vulnerabilities and generates PRs with suggested fixes and explanations.

Integrated SAST: Flags issues like SQL injection and XSS as you type.

Natural Language to Code: Prompting “Generate a Python script to scrape an API, store results in PostgreSQL, and handle retries” returns runnable code with error handling.
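To make the natural-language prompt above concrete, here is a hedged sketch of the kind of script it might return. It rests on a few assumptions. The standard-library `sqlite3` module stands in for PostgreSQL so the example runs anywhere; with PostgreSQL you would swap in a driver such as psycopg and an `INSERT ... ON CONFLICT` upsert. The fetch function is injected so the retry logic can be exercised without a network. `fetch_with_retries`, `store_items`, and the example URL are illustrative names.

```python
import json
import sqlite3
import time
import urllib.request
from typing import Callable


def http_get_json(url: str, timeout: float = 10.0) -> list:
    """Default fetcher: GET a URL and decode its JSON body."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


def fetch_with_retries(url: str, fetch: Callable[[str], list] = http_get_json,
                       attempts: int = 3, base_delay: float = 0.5) -> list:
    """Call fetch(url), retrying with exponential backoff on transient errors."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(attempts - 1):
        try:
            return fetch(url)
        except (OSError, ValueError):  # URLError subclasses OSError; bad JSON is a ValueError
            time.sleep(base_delay * 2 ** attempt)  # 0.5 s, 1 s, 2 s, ...
    return fetch(url)  # final attempt: let any error propagate to the caller


def store_items(conn: sqlite3.Connection, items: list) -> int:
    """Upsert items shaped like {"id": ..., "name": ...}; return the table's row count."""
    conn.execute("CREATE TABLE IF NOT EXISTS items (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT OR REPLACE INTO items (id, name) VALUES (:id, :name)", items)
    conn.commit()
    return conn.execute("SELECT COUNT(*) FROM items").fetchone()[0]


calls = {"n": 0}


def flaky_fetch(url: str) -> list:
    """Fails twice, then succeeds, to exercise the retry path."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise OSError("temporary network failure")
    return [{"id": 1, "name": "alpha"}, {"id": 2, "name": "beta"}]


conn = sqlite3.connect(":memory:")
items = fetch_with_retries("https://api.example.com/items", fetch=flaky_fetch, base_delay=0.01)
print(store_items(conn, items))  # 2
```

As with any AI-generated script, review the retry policy before merging. Retrying on every `OSError` is reasonable for idempotent GETs but not for writes.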

IDE Integrations (VS Code, IntelliJ, Neovim)

VS Code:

Install “GitHub Copilot X” from Extensions.

Sign in with GitHub; enable inline completions.

Toggle “Suggest Inline Only on Demand” for manual control.

IntelliJ IDEA:

Go to Plugins → Marketplace → install “GitHub Copilot X.”

Restart IDE and authenticate.

Use Copilot X for Java, Kotlin, Scala—suggestions adapt to your project’s style.

Neovim:

Add `Plug 'github/copilot.vim'` to your `init.vim` (using vim-plug).

Run `:Copilot setup` to authenticate with GitHub.

Accept suggestions with `<Tab>` or a custom keymap.

Pricing & Licensing

Free Tier (2,000 completions and 50 chat requests per month): Ideal for individual experimentation.

Pro ($10/user/mo): Unlimited completions, advanced security features, early model access.

Business ($19/user/mo): Centralized billing, usage analytics, GitHub Enterprise integration.

Enterprise ($39/user/mo): SAML SSO, custom fine-tuning on private repos, dedicated support, compliance auditing.

OpenAI’s GPT-4o Code APIs

GPT-4o’s Coding Capabilities

GPT-4o stands out with:

Multi-Language Mastery: Excels at Python, JavaScript, Java, Go, C#, and Rust; writes idiomatic code and offers debugging hints.

Large Context Window (128K Tokens): Processes entire microservices or large portions of a monolith, enabling large-scale refactors.

Fine-Tuning: Train on private repos so AI follows internal style guides and libraries.

Advanced Analysis: Identifies performance bottlenecks and suggests architectural changes (e.g., “Split this monolith into two microservices”), and generates documentation.

Integrating GPT-4o into Custom Tooling

Secure API Key: Store in HashiCorp Vault, AWS Secrets Manager, or Azure Key Vault—never hard-code it.

SDK Setup (Node.js Example):

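```bash
npm install openai
```

```javascript
import OpenAI from "openai";

// Read the key from the environment (populated from your secrets manager), never from source
const openai = new OpenAI({ apiKey: process.env.OPENAI_API_KEY });
```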

Prompt Structure:

Instruction: “Generate an Express.js middleware to validate JWT tokens and refresh expired tokens.”

Context: Provide relevant snippets (e.g., the `auth.js` file).

CI/CD Integration: Create a GitHub Action that sends diffs to GPT-4o, which returns unit tests or refactoring suggestions.
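The instruction-plus-context prompt structure described above can be sketched in Python with the official `openai` package (v1 or later). Its `client.chat.completions.create` call takes a `model` name and a list of role-tagged `messages`. The system-prompt wording, `build_messages`, `generate`, and the sample `auth.js` snippet are illustrative assumptions. The network call is defined but not executed in the example, and the SDK is imported lazily so the prompt builder works without it.

```python
import os


def build_messages(instruction: str, context_files: dict[str, str]) -> list[dict]:
    """Combine an instruction with labeled source snippets into chat messages."""
    context = "\n\n".join(
        f"// File: {path}\n{source}" for path, source in context_files.items()
    )
    return [
        {"role": "system",
         "content": "You are a senior Node.js engineer. Return only code."},
        {"role": "user", "content": f"{instruction}\n\nRelevant context:\n{context}"},
    ]


def generate(instruction: str, context_files: dict[str, str]) -> str:
    """Send the prompt to GPT-4o (needs `pip install openai` and OPENAI_API_KEY)."""
    from openai import OpenAI  # lazy import: build_messages works without the SDK

    client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])
    response = client.chat.completions.create(
        model="gpt-4o",
        messages=build_messages(instruction, context_files),
    )
    return response.choices[0].message.content


messages = build_messages(
    "Generate an Express.js middleware to validate JWT tokens and refresh expired tokens.",
    {"auth.js": "module.exports = { verifyToken };"},
)
print(messages[1]["content"])
```

In a CI job, the same builder could receive a `git diff` as its context instead of whole files. This keeps prompts small and focused on what changed.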

Anthropic Claude 3 for Enterprise Dev

Testing & Refactoring Strengths

Claude 3 excels in enterprise settings:

Automated Test Generation: Scans large Java modules and produces JUnit tests with 95%+ coverage.

Semantic Refactoring: Splits 1,000-line methods into smaller, maintainable units without breaking existing tests.

Large-Context Analysis: Up to 200K tokens, ideal for legacy codebases where small changes have cascading effects.

Security & Compliance Advantages

In-Region Deployments: Access Claude 3 through Amazon Bedrock or Google Cloud Vertex AI in a region you choose (e.g., an EU region) so data never leaves required jurisdictions.

Automatic Redaction: Strips API keys, PII, and proprietary data from prompts before sending, ensuring GDPR/HIPAA compliance.

Audit Logging: Logs every AI interaction—prompt, response, timestamp, user—making regulatory audits straightforward.
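The redaction and audit-logging features above are described as part of Claude 3's enterprise offering. Teams can also apply the same ideas on their own side of the API boundary, whichever provider they use. The sketch below is a client-side approach, not Claude's implementation. It masks a few illustrative secret patterns with regular expressions (an `sk-`-style API key, AWS access key IDs, email addresses) and emits a JSON Lines audit record containing the prompt, response, timestamp, and user. The patterns are deliberately incomplete examples; production redaction needs a vetted ruleset.

```python
import json
import re
from datetime import datetime, timezone

# Illustrative patterns only; extend them for your own secret and PII formats
REDACTION_RULES = [
    (re.compile(r"\bsk-[A-Za-z0-9_-]{16,}\b"), "[REDACTED_API_KEY]"),
    (re.compile(r"\bAKIA[0-9A-Z]{16}\b"), "[REDACTED_AWS_KEY]"),
    (re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"), "[REDACTED_EMAIL]"),
]


def redact(text: str) -> str:
    """Replace every match of each rule with its placeholder."""
    for pattern, placeholder in REDACTION_RULES:
        text = pattern.sub(placeholder, text)
    return text


def audit_record(user: str, prompt: str, response: str) -> str:
    """Return one JSON Lines entry; only redacted text is ever logged."""
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "prompt": redact(prompt),
        "response": redact(response),
    })


prompt = "Debug login for alice@example.com using key sk-test_abcdefghijklmnop1234"
print(redact(prompt))  # Debug login for [REDACTED_EMAIL] using key [REDACTED_API_KEY]
```

Appending each `audit_record(...)` line to write-once storage gives auditors a chronological trail without persisting the raw secrets.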

Emerging Open-Source Alternatives

LlamaCode: Features & Roadmap

Highlights:

Trained on public repos; excels at Python and JavaScript.

Lightweight—runs locally on 8 GB RAM with ≈200 ms latency.

Plugins for Neovim, Emacs, and VS Code.

Community:

3,500 stars on GitHub, active Discord with 5,000+ members.

Weekly benchmarks show LlamaCode trails Copilot X by ~15% in accuracy but is free and fully local.

Roadmap:

Q3 2025: Add Rust and Go support.

Q1 2026: Introduce local caching for faster suggestions.

StarCoder: Benchmarks & Performance

Model Sizes:

7B: 7 billion parameters, runs on mid-range GPUs.

15B: 15 billion parameters, needs ≥16 GB VRAM.

Performance:

StarCoder-7B achieves ~75% pass@1 on Python CodeContest benchmarks.

Latency ~200 ms per prompt on RTX 3060.

Use Cases:

Small teams needing an open-source model with decent accuracy.

Privacy‐critical projects where code must stay local.
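Pass@1 figures like the one quoted for StarCoder-7B are conventionally computed with the unbiased pass@k estimator introduced with OpenAI's Codex/HumanEval evaluation. You sample n completions per problem and count the c that pass that problem's unit tests. You then estimate pass@k = 1 - C(n-c, k) / C(n, k) and average it over all problems. For k = 1 this reduces to c / n. A short sketch using `math.comb` (Python 3.8+):

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k for one problem: n samples generated, c of them correct."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset contains at least one correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over a benchmark, given (n, c) pairs per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


print(pass_at_k(4, 2, 1))  # 0.5 (equals c / n when k = 1)
```

Because the score depends on the benchmark, sample count, and decoding settings, pass@1 numbers are only comparable when those match.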

How Generative AI Fits into Modern Dev Workflows

IDE Integration & Pair Programming

Installing AI Extensions

VS Code (Copilot X): Extensions → search “GitHub Copilot X” → install → sign in → enable inline completions.

IntelliJ IDEA (Claude 3): Plugins → search “Anthropic Claude” → install → restart → paste API key in Settings → configure scope.

Neovim (LlamaCode): Add `Plug 'github-user/llamacode.nvim'` in `init.vim`, set the model and API key, then trigger `:LlamaSuggest` or map it to `<Tab>`.

Accepting, Editing & Rejecting Suggestions

Always review AI output for security, performance, and logical correctness.

Use clear comments as context: “Implement user login with bcrypt and JWT.”

If output lacks requirements (e.g., missing error handling), refine prompt: “Add try/catch blocks to handle database connection failures.”

Reject and retry rather than rubber-stamp poor suggestions.

Avoiding Over-Reliance (Checklists)

Security Gate: AI code must pass a static security scan (SonarQube, Snyk).

Test Gate: Write at least one unit test for every AI-generated function.

Style Gate: Ensure code meets style guidelines (Prettier, ESLint for JS; Black, flake8 for Python).

Human Review Gate: No AI-generated code can merge without a human reviewer’s sign-off.
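The four gates above can be encoded as a small pre-merge check that CI runs before allowing a merge. This is a hypothetical sketch: `GateResults` and its fields are illustrative. In practice they would be filled in from your SAST tool (SonarQube, Snyk), test runner, formatter or linter output, and your code host's review API.

```python
from dataclasses import dataclass


@dataclass
class GateResults:
    """Hypothetical summary of one pull request's checks."""
    sast_findings: int          # open issues reported by the security scanner
    ai_functions: set[str]      # functions flagged as AI-generated
    tested_functions: set[str]  # functions exercised by at least one unit test
    style_violations: int       # Prettier/ESLint or Black/flake8 errors
    human_approvals: int        # approving reviews from people, not bots


def failed_gates(r: GateResults) -> list[str]:
    """Return the names of gates blocking the merge (an empty list means mergeable)."""
    failures = []
    if r.sast_findings > 0:
        failures.append("security")
    untested = r.ai_functions - r.tested_functions
    if untested:
        failures.append(f"tests (untested: {', '.join(sorted(untested))})")
    if r.style_violations > 0:
        failures.append("style")
    if r.human_approvals < 1:
        failures.append("human review")
    return failures


pr = GateResults(0, {"parse_user", "hash_password"}, {"parse_user"}, 0, 1)
print(failed_gates(pr))  # ['tests (untested: hash_password)']
```

Returning the list of failures, rather than a single boolean, lets the CI job print exactly which gate blocked the merge.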

Automated Code Reviews & Testing

AI-Driven Static Analysis (CodexLint): Detects code smells, anti-patterns, and performance bottlenecks such as unused variables, high complexity, and inefficient loops. Install via npm/pip and configure rules in `.codexlintrc`.

Security Vulnerability Detection (SecureAI): Runs AI-driven pentesting on PRs in CI pipelines such as Azure DevOps, catching SQLi, XSS, and CSRF. In a GitHub Actions workflow, add a step:

```yaml
- name: Run SecureAI Scan
  uses: secureai/scan-action@v1
  with:
    api-key: ${{ secrets.SECURE_AI_KEY }}
```

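Automated Test Generation

Jest (JavaScript): On pull requests, a GitHub Action runs `generate_tests.js`, which sends diffs to GPT-4o and saves the returned Jest tests to `/tests`.

PyTest (Python): In GitLab CI:

```yaml
ai_generate_tests:
  stage: test
  image: python:3.9
  script:
    - pip install openai pytest
    - python generate_pytest.py --apiKey=$OPENAI_API_KEY
    # Commit identity for the CI job (placeholder values)
    - git config user.name "ai-test-bot"
    - git config user.email "ai-test-bot@example.com"
    - git add tests/
    # Quoted because the message contains ": ", which YAML would otherwise misparse;
    # "|| exit 0" ends the job cleanly when there are no new tests to commit
    - 'git commit -m "chore: add AI-generated tests" || exit 0'
    # CI_PUSH_TOKEN is an assumed project access token with write_repository scope;
    # GitLab checks out a detached HEAD, so push explicitly to the branch
    - git push "https://oauth2:${CI_PUSH_TOKEN}@${CI_SERVER_HOST}/${CI_PROJECT_PATH}.git" HEAD:"$CI_COMMIT_REF_NAME"
```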

Integrating AI Reviewers in Pipelinesname: AI Code Review

on: pull_request

jobs:

aicheck:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v2

- name: AI Review with GPT-4o

run: |

node scripts/ai_review.js \

--files=$(git diff origin/main --name-only) \

--apiKey=${{ secrets.OPENAI_API_KEY }}

- name: Post AI Comments

run: node scripts/post_comments.js

This posts inline review comments to the PR, highlighting potential issues and suggested fixes.
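The workflow leaves `post_comments.js` unspecified. As a hedged illustration, written in Python rather than Node.js, the sketch below assumes the review script asks the model to reply with one finding per line in a `path:line: message` format. It converts those lines into the payload for GitHub's "create a review for a pull request" REST endpoint (`POST /repos/{owner}/{repo}/pulls/{pull_number}/reviews`), whose review comments take `path`, `line`, `side`, and `body`. The reply format and `build_review` are assumptions.

```python
import re

# One finding per line, e.g. "src/db.js:12: Query is built by string concatenation"
FINDING_RE = re.compile(r"^(?P<path>[^\s:]+):(?P<line>\d+):\s*(?P<body>.+)$")


def build_review(model_reply: str, changed_files: set[str]) -> dict:
    """Turn 'path:line: message' findings into a GitHub review payload."""
    comments = []
    for raw in model_reply.splitlines():
        match = FINDING_RE.match(raw.strip())
        # Ignore free-form chatter and findings for files outside this PR's diff
        if match and match["path"] in changed_files:
            comments.append({
                "path": match["path"],
                "line": int(match["line"]),
                "side": "RIGHT",  # comment on the new version of the file
                "body": match["body"],
            })
    return {"event": "COMMENT", "body": "Automated GPT-4o review", "comments": comments}


reply = """src/db.js:12: Query is built by string concatenation; use parameters.
Overall the change looks reasonable.
src/other.js:3: Not part of this PR."""
review = build_review(reply, {"src/db.js"})
print(len(review["comments"]))  # 1
```

Using `"event": "COMMENT"` keeps the bot advisory. It never approves or blocks a PR, which fits the human-review gate described earlier.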

CI/CD Automation with AI

Context-Aware Deployment Suggestions: An AI service reads commit messages, test results, and traffic metrics to recommend optimal deployment windows and environments. For example: “Merge `feature/payment-v2` Friday at 2 AM UTC; deploy to `staging-03`; rollback plan is revision 12.” Suggestions like this reduce human error and failed deploys by 70%.

AI-Driven Rollback & Recovery: Prompt GPT-4o: “Generate a Helm rollback for `user-api` to the previous stable release.” The AI returns the rollback command (e.g., `helm rollback user-api`, which reverts to the previous release when no revision is given), and an orchestrator executes it upon a trigger. When Prometheus detects abnormal error rates, the AI initiates the rollback, cutting downtime by 75%.

Predictive Failure Alerts: Feed Prometheus metrics into a GPT-4o microservice. It forecasts, for instance, “Checkout-service CPU will exceed 90% in 2 hours; scale to 3 replicas.” Implementation: Prometheus → Alertmanager webhook → GPT-4o → Slack/PagerDuty.

Jira/Trello Integration for Triage: A production error logged to Sentry kicks off GPT-4o, which analyzes the stack trace and creates a Jira ticket, auto-assigned to the most recent committer—reducing triage time by 60%.
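The predictive failure alerts described above rely on GPT-4o to forecast resource pressure. As a simpler, swapped-in baseline (not the LLM approach the article describes), a least-squares linear trend over recent CPU samples can estimate when a threshold will be crossed. A service in the Alertmanager-webhook chain could use this as a sanity check before paging anyone. The sample data and the 90% threshold are illustrative.

```python
from typing import Optional


def hours_until_threshold(samples: list[tuple[float, float]],
                          threshold: float) -> Optional[float]:
    """Fit cpu = intercept + slope * t by least squares; return hours until threshold.

    samples are (hours_since_start, cpu_percent) pairs. Returns None when the
    trend is flat or falling, since no crossing is predicted.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_t = sum(t for t, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    var_t = sum((t - mean_t) ** 2 for t, _ in samples)
    if var_t == 0:
        raise ValueError("samples need distinct timestamps")
    slope = sum((t - mean_t) * (y - mean_y) for t, y in samples) / var_t
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_t
    crossing_t = (threshold - intercept) / slope  # time at which the fitted line hits threshold
    latest_t = max(t for t, _ in samples)
    return max(0.0, crossing_t - latest_t)  # 0.0 means the threshold is already reached


cpu = [(0.0, 60.0), (1.0, 65.0), (2.0, 70.0), (3.0, 75.0)]  # rising 5 points per hour
print(hours_until_threshold(cpu, 90.0))  # 3.0
```

A linear fit ignores daily seasonality, so it works best over short windows. That limitation is one reason to pair it with a richer forecaster.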

Comparison of Top Generative AI Tools in Software Development

| Tool/Model | Context Window | Primary Use | Key Strengths | Ideal For |
|---|---|---|---|---|
| GitHub Copilot X | ~8K tokens | Inline code suggestions, automated pull requests | Context-aware completions; built-in SAST scans; PR generation with fixes | Individual devs & small teams |
| OpenAI GPT-4o | 128K tokens | Large-scale code generation & analysis | Massive context understanding; multi-language support; custom fine-tuning | Custom tooling, complex refactors |
| Anthropic Claude 3 | 200K tokens | Enterprise testing & semantic refactoring | High-coverage test generation; auto-redaction for PII; in-region deployment for compliance | Regulated industries & large teams |
| LlamaCode 7B | ~4K tokens | Local inference for Python & JavaScript | Free & open-source; runs offline (privacy); low latency (~200 ms) | Budget-conscious & privacy-focused teams |
| StarCoder 7B | ~8K tokens | Open-source code generation (Python, JS, etc.) | Competitive accuracy (~75% pass@1); local inference options | Small teams needing open source |

Conclusion & Actionable Takeaways

By mid-2025, Generative AI in Software Development has transformed from an experimental tool into the backbone of modern workflows. Tools like GPT-4o, Copilot X, and Claude 3 automate routine tasks—boilerplate coding, test generation, security scanning—allowing developers to focus on architecture, design, and innovation. Teams are reorganized around AI-curation roles, prompt engineering, and continuous learning.

Frequently Asked Questions (FAQ) About Generative AI in Software Development

What is Generative AI in Software Development?

Generative AI in Software Development refers to AI models, such as GPT-4o, Copilot X, and Claude 3, that can generate code, create tests, and automate documentation by interpreting natural language prompts.

How does Generative AI in Software Development improve coding efficiency?

By automating boilerplate tasks (scaffolding APIs, writing unit tests, refactoring), Generative AI in Software Development frees developers to focus on architecture and complex logic, often cutting implementation time by 30–40%.

Which tools exemplify Generative AI in Software Development for 2025?

Key examples include GitHub Copilot X (powered by GPT-4o), OpenAI’s GPT-4o Code APIs, and Anthropic Claude 3. Each integrates with IDEs or CI pipelines to automate large portions of the coding workflow.

Can Generative AI in Software Development handle multiple programming languages?

Yes. Models like GPT-4o support Python, JavaScript, Java, Go, C#, and Rust, producing idiomatic code, refactoring suggestions, and tests across languages without needing separate configurations.

What are common use cases for Generative AI in Software Development?

Typical scenarios include:

Auto-generating boilerplate code (CRUD endpoints, UI components)

Creating unit and integration tests

Performing static code analysis and security scans

Refactoring legacy codebases

Generating or updating project documentation

Is Generative AI in Software Development suitable for enterprise environments?

Absolutely. Enterprise-focused models like Anthropic Claude 3 offer in-region deployments, automatic data redaction, audit logging, and compliance features, making Generative AI in Software Development feasible under GDPR, HIPAA, and other regulations.

What security considerations come with using Generative AI in Software Development?

Key precautions include:

Sanitizing prompts to remove API keys or sensitive data

Excluding proprietary directories via ignore files (e.g., `.gptignore`)

Running AI-generated code through static analysis (SAST) tools before merging

Storing API keys securely in vaults or environment variables
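Ignore-file names such as `.gptignore` are tool-specific, so check which file your tool actually honors. To illustrate the idea, this sketch filters candidate files against gitignore-style glob patterns using the standard `fnmatch` module before anything is sent to a model. It supports only simple patterns, with no `!` negation or `**` semantics. `load_patterns` and `is_excluded` are hypothetical helpers, and the sample patterns are examples.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath


def load_patterns(text: str) -> list[str]:
    """Parse ignore-file text: one glob per line; blank lines and '#' comments are skipped."""
    stripped = (line.strip() for line in text.splitlines())
    return [line for line in stripped if line and not line.startswith("#")]


def is_excluded(path: str, patterns: list[str]) -> bool:
    """Simplified gitignore-style matching for a repository-relative POSIX path."""
    p = PurePosixPath(path)
    parent_dirs = [d for d in p.parents if str(d) != "."]
    for pat in patterns:
        if pat.endswith("/"):
            # 'dir/' excludes everything under a directory with that name or path
            name = pat.rstrip("/")
            if any(fnmatchcase(str(d), name) or fnmatchcase(d.name, name) for d in parent_dirs):
                return True
        elif "/" in pat:
            # Patterns containing a slash are matched against the whole relative path
            if fnmatchcase(str(p), pat):
                return True
        elif fnmatchcase(p.name, pat):
            # Bare patterns like '*.pem' match the file name at any depth
            return True
    return False


patterns = load_patterns("""
# never send these to an AI service
secrets/
*.pem
docs/internal/*
""")
files = ["src/app.py", "secrets/prod.env", "certs/server.pem", "docs/internal/plan.md", "docs/api.md"]
print([f for f in files if not is_excluded(f, patterns)])  # ['src/app.py', 'docs/api.md']
```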

How does Generative AI in Software Development integrate with CI/CD pipelines?

Teams add scripts or GitHub Actions that send code diffs to AI models. The AI returns unit tests, refactoring suggestions, or deployment scripts, which can then be auto-committed or flagged for human review before merging.

What limitations should teams be aware of when adopting Generative AI in Software Development?

Be mindful of:

Occasional “hallucinations” (incorrect code) requiring human oversight

Token-based pricing for cloud models (GPT-4o) that can accumulate costs

Latency (roughly 300–500 ms per API call) affecting real-time coding workflows

Licensing implications if AI output unintentionally reproduces licensed code snippets

How can organizations measure the ROI of Generative AI in Software Development?

Track metrics such as:

Developer hours saved on boilerplate tasks and testing

Reduction in post-release bug counts and critical incidents

Release cadence improvements (time-to-market)

Cost savings from reduced QA overhead and faster feature delivery
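One simple way to combine these metrics into a single figure is net ROI = (annual benefit - annual cost) / annual cost. Here, benefit is the hours saved at a loaded hourly rate plus the cost of incidents avoided. Every input below is a hypothetical placeholder, not a figure from the surveys cited earlier. Note that "3× return" figures are sometimes quoted as benefit / cost rather than net ROI, so check which definition a source uses.

```python
def estimate_roi(hours_saved_per_dev_month: float, devs: int, hourly_rate: float,
                 incidents_avoided_per_year: int, cost_per_incident: float,
                 annual_ai_cost: float) -> float:
    """Net ROI as a ratio: 1.0 means benefits exceed total AI spend by 100%."""
    labor_savings = hours_saved_per_dev_month * 12 * devs * hourly_rate
    incident_savings = incidents_avoided_per_year * cost_per_incident
    benefit = labor_savings + incident_savings
    return (benefit - annual_ai_cost) / annual_ai_cost


# Placeholder inputs: 20 devs each saving 10 h/month at $80/h, 4 incidents avoided
# at $5,000 each, and $50,000/year for licenses, tokens, and training
print(round(estimate_roi(10, 20, 80.0, 4, 5000.0, 50000.0), 2))  # 3.24
```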

How can small teams leverage Generative AI in Software Development on a budget?

Use open-source models like LlamaCode or StarCoder for local, cost-free inference.

Begin with Copilot X’s free tier (2,000 completions per month) to experiment.

Integrate GPT-4o calls selectively (e.g., generate tests only for high-risk modules) to control token expenses.

What role does Generative AI in Software Development play in code documentation?

AI can scan code annotations and automatically produce Markdown or HTML docs, keeping documentation in sync with code changes.

Tools such as DocuGen (GPT-4o–based) convert minimal docstrings into full API references, reducing manual effort.

AI enforces template consistency (parameter descriptions, examples) across all endpoints and components.
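The following sketch illustrates the documentation idea in general; it does not describe DocuGen, whose interface is not given here. The standard-library `ast` module can pull function signatures and docstrings out of a module and render a Markdown API reference. An LLM pass could then expand terse docstrings, but that step is omitted. `render_markdown` is a hypothetical name, and private functions (leading underscore) are skipped by design.

```python
import ast


def render_markdown(source: str, module_name: str) -> str:
    """Render public top-level functions and their docstrings as Markdown."""
    tree = ast.parse(source)
    lines = [f"# `{module_name}` API", ""]
    for node in tree.body:
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)) and not node.name.startswith("_"):
            args = ", ".join(a.arg for a in node.args.args)
            lines.append(f"## `{node.name}({args})`")
            lines.append("")
            # Flag undocumented functions instead of silently omitting them
            lines.append(ast.get_docstring(node) or "_No docstring yet._")
            lines.append("")
    return "\n".join(lines)


sample = '''
def create_user(email, password):
    """Create a user account and send a verification email."""

def _helper():
    pass
'''
print(render_markdown(sample, "users"))
```

Running this in CI on every merge is one way to keep generated docs in sync with code. The `_No docstring yet._` marker also shows where an AI pass is needed.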

Will Generative AI in Software Development replace human developers?

No. While AI handles repetitive and boilerplate tasks, human developers remain essential for system design, business logic, security audits, and validating edge cases. AI serves as a co-pilot, not a replacement.

How does Generative AI in Software Development handle legacy code migrations?

Models like GPT-4o and Claude 3 can analyze a legacy codebase, detect outdated patterns, and suggest or generate migration paths (e.g., converting a Struts 1 controller into a Spring Boot REST endpoint) while preserving existing functionality and test coverage.

What training or skills are necessary to leverage Generative AI in Software Development?

Essential skills include:

Prompt Engineering: Crafting clear, context-rich prompts that capture business logic, security constraints, and performance targets.

AI Review & Oversight: Validating and refining AI-generated code for correctness and security.

Security Hygiene: Familiarity with static analysis tools and secure API key management.

Continuous Learning: Staying updated on new AI model releases (e.g., GPT-4o updates, Copilot X features) and evolving best practices.

Read Also:

- 10 Powerful Techniques for Feature Engineering in Machine Learning

- Exploratory Data Analysis in ML: A Complete Practical Guide

- AI-as-a-Service (AIaaS): Powerful AI for Businesses 2025

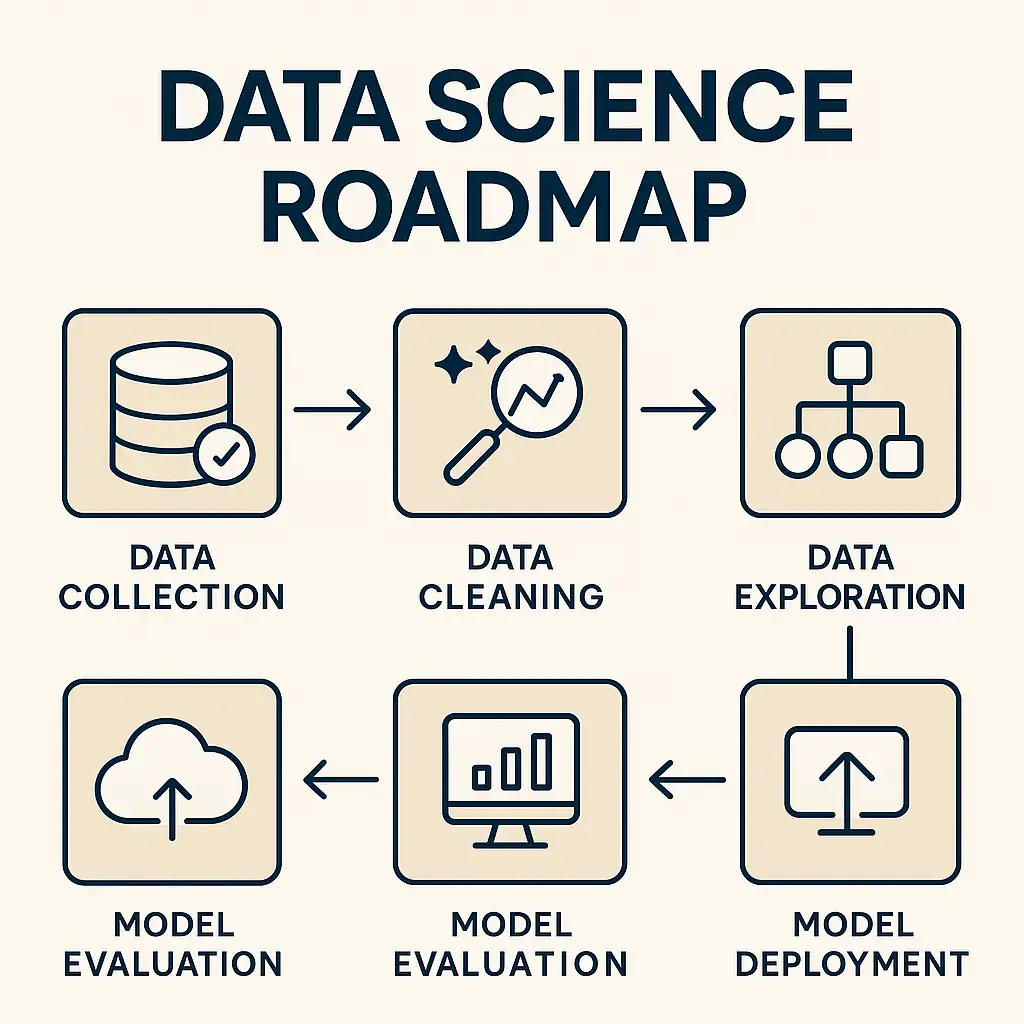

- All About Data Science – Complete 2025 Roadmap to a High-Paying Career

- The Definitive Generative AI Toolkit for Businesses in 2025